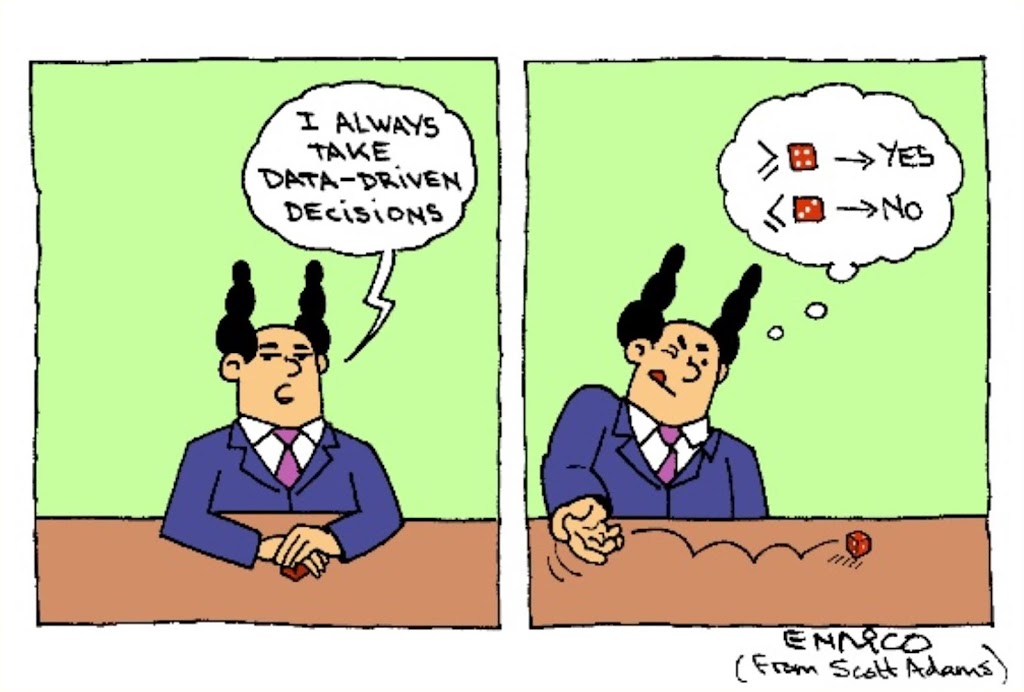

With the increasing availability of data and off-the-shelf analysis tools, interventions are thriving.

Interventions rarely create value. That rarity is expected, simply because noise is often far more likely than a real effect. Larger amounts of data, however, exacerbate the problem of finding value in interventions where none exists. For example, a frequentist test with a 0.01 p-value threshold would justify an intervention if a result at least as extreme as the observed one would occur by chance less than 1% of the time. That probability shrinks as the data grows, not because the intervention gains value*. The 1% threshold should therefore be a moving target, yet it is often treated as a fixed one. It should also be adjusted for other reasons, such as running multiple tests.
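To make the point concrete, here is a minimal sketch, assuming a two-sample z-test with a known standard deviation of 1 and a hypothetical true effect of 0.01 standard deviations. It evaluates the p-value at the expected z-statistic rather than simulating noisy samples, so it shows the trend and not any real experiment. The function names, the effect size, and the sample sizes are illustrative assumptions. The Bonferroni correction is shown as one common way to adjust a threshold for multiple tests, not as the only one.

```python
import math


def expected_p_value(effect, n_per_group, sd=1.0):
    """Two-sided p-value of a two-sample z-test, evaluated at the
    expected z-statistic for a fixed true effect (illustrative only)."""
    # Standard error of the difference between two group means.
    standard_error = sd * math.sqrt(2.0 / n_per_group)
    z = effect / standard_error
    # Two-sided tail probability of a standard normal: 2 * (1 - Phi(|z|)).
    return math.erfc(abs(z) / math.sqrt(2.0))


def bonferroni_threshold(alpha, n_tests):
    """Per-test threshold that keeps the family-wise error rate at alpha."""
    return alpha / n_tests


TINY_EFFECT = 0.01  # hypothetical effect, in standard deviations

for n in (100, 10_000, 1_000_000):
    p = expected_p_value(TINY_EFFECT, n)
    print(f"n per group = {n:>9,}  p-value = {p:.3g}")

# With 20 tests, a 0.01 family-wise threshold becomes 0.0005 per test.
print(bonferroni_threshold(0.01, 20))
```

The effect is identical in every row; only the sample size changes. Under these assumptions the same negligible effect moves from far above the 0.01 threshold to far below it as the sample grows, which is why a fixed threshold says little about value.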

More importantly, the threshold should be adjusted for unintended consequences. While quantifying those consequences is difficult, we can incentivize analytics teams to find out what not to do. Action is visible, but inaction is not. Successful data-centric companies should not mistake thoughtful inaction for idleness. On the contrary, they should encourage and reward it.

*Assuming the actual effect is not zero, which holds for most (if not all) problems outside the natural sciences.