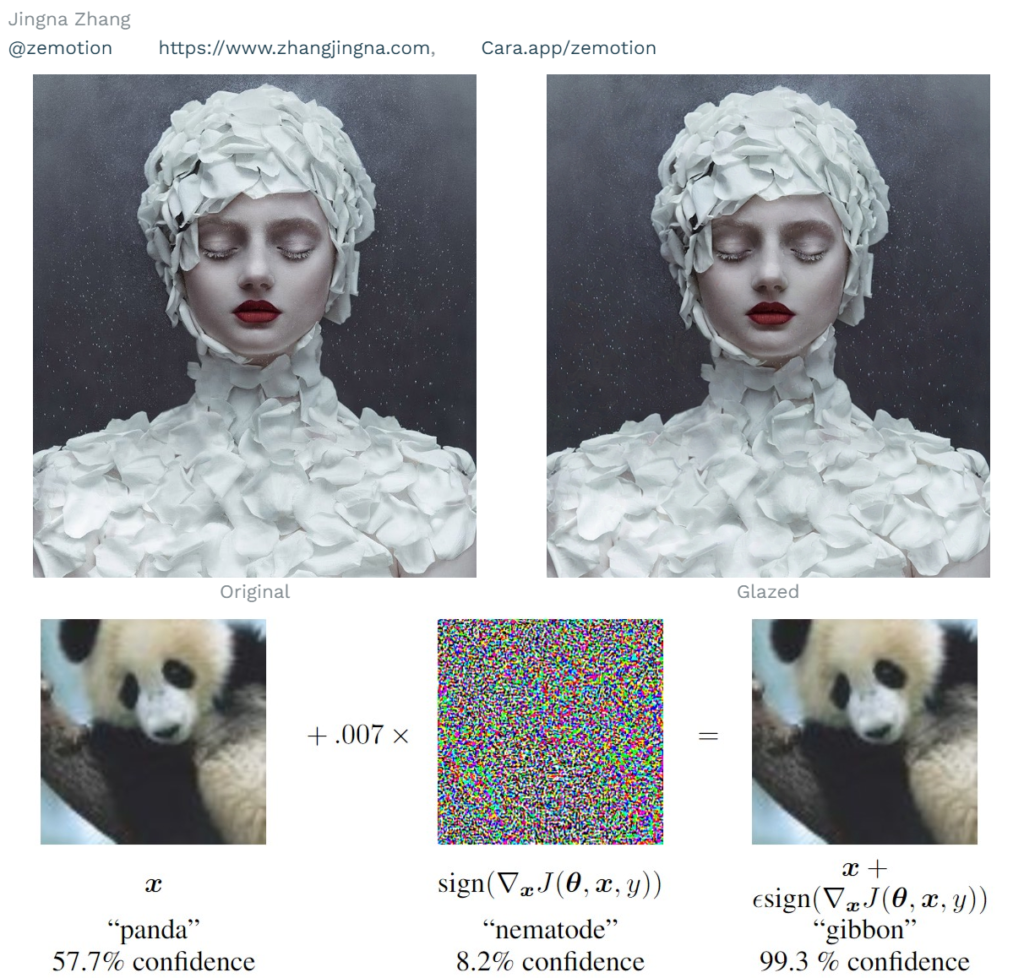

To humans, the answer is undoubtedly yes. To algorithms, they could easily be two completely different images, or at least images whose characteristics are badly misread. The image on the right is the 𝘨𝘭𝘢𝘻𝘦𝘥 version of the original image on the left.

Glaze is a product of the SAND Lab at the University of Chicago that helps artists protect their art from generative AI companies. It adds noise to artwork that is invisible to the human eye but misleading to the algorithm.

Glaze is free to use but, understandably, not open source: publishing the code would give art thieves a head start in adapting to it in this cat-and-mouse game.

The idea is similar to the adversarial attack famously discussed in Goodfellow et al. (2015), where an image of a panda classified with 57.7% confidence is classified as a gibbon with 99.3% confidence after imperceptible adversarial noise is added to it.
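To make the panda-to-gibbon effect concrete, here is a minimal sketch of the fast gradient sign method from that paper, run on a toy logistic-regression "classifier" rather than a real image model. It is not Glaze's algorithm, which is not public. The weights `w`, the input `x`, the budget `eps`, and the helper names are all hypothetical, chosen only for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a toy logistic-regression model."""
    return sigmoid(np.dot(w, x) + b)

def fgsm_perturb(w, b, x, y, eps):
    """One fast-gradient-sign step: move every input coordinate by eps
    in the direction that increases the cross-entropy loss for label y."""
    p = predict_proba(w, b, x)
    grad_x = (p - y) * w  # gradient of the loss with respect to the input
    return x + eps * np.sign(grad_x)

# Hypothetical toy setup: a 1000-"pixel" input and random model weights.
rng = np.random.default_rng(0)
dim = 1000
w = rng.normal(size=dim)
b = 0.0

# Build an input whose logit is exactly 0.3, i.e. class 1 with modest confidence.
x0 = rng.normal(size=dim)
x = x0 - ((np.dot(w, x0) - 0.3) / np.dot(w, w)) * w

eps = 0.01  # maximum change allowed per coordinate
x_adv = fgsm_perturb(w, b, x, y=1.0, eps=eps)

p_clean = predict_proba(w, b, x)
p_adv = predict_proba(w, b, x_adv)
max_change = np.max(np.abs(x_adv - x))

print(f"P(class 1) before: {p_clean:.3f}")
print(f"P(class 1) after:  {p_adv:.5f}")
print(f"largest per-coordinate change: {max_change:.3f}")
```

No single coordinate moves by more than `eps`, yet the model's confidence in the original class collapses, because a thousand tiny nudges that all push the same way add up in the model's weighted sum. That is the intuition behind both the panda example and, presumably, any cloaking approach that hides large feature changes behind small pixel changes.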

I heard about this cool and useful project a while ago and have been meaning to help spread the word. In the words of the researchers:

> Glaze is a system designed to protect human artists by disrupting style mimicry. At a high level, Glaze works by understanding the AI models that are training on human art, and using machine learning algorithms, computing a set of minimal changes to artworks, such that it appears unchanged to human eyes, but appears to AI models like a dramatically different art style. For example, human eyes might find a glazed charcoal portrait with a realism style to be unchanged, but an AI model might see the glazed version as a modern abstract style, a la Jackson Pollock. So when someone then prompts the model to generate art mimicking the charcoal artist, they will get something quite different from what they expected.

The sample artwork is by Jingna Zhang.