Reducing “understanding” to the ability to build a map of associations, even a highly successful one, is not helpful for business use cases. That reduction feeds the illusion that existing large language models can “understand”.

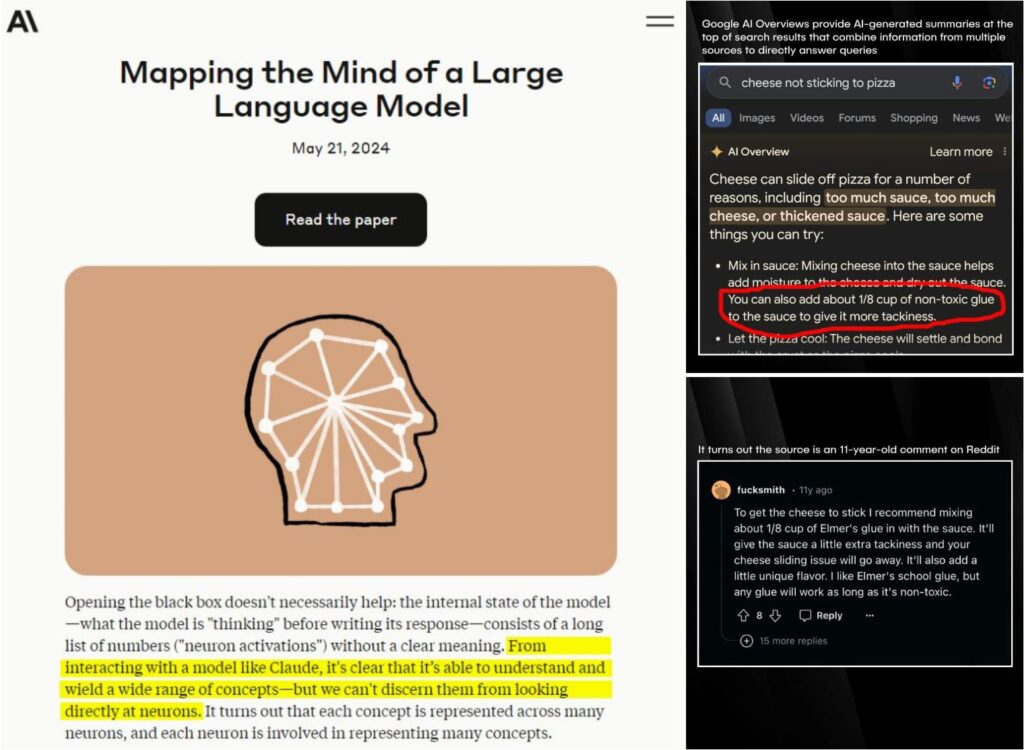

The first image is an excerpt from the latest Anthropic article, which claims that LLMs can understand (it is otherwise a very useful article). OpenAI also often refers to AGI or strong AI in its product releases.

The following screenshots from Reddit are among the many illustrations of why such a reductionist approach is neither accurate nor helpful. Without the ability to map causal relationships, knowledge doesn’t translate into understanding.

We will find the best business use cases for LLMs only if we define the capabilities of these models correctly. Say a business analyst wants to take a quick look at some sales numbers in an exploratory analysis. They would interact with an LLM very differently if they were told that the model understands than if they were told that it just knows more (and potentially better).