With OpenAI’s release of o1, I revisited my talk on learning with LLMs. In this talk, I focus on the advantages and disadvantages of using LLMs for professional learning. The discussion distinguishes between knowing and understanding, and underlines that identifying causality is central to understanding. The link between the two is the ability to reason, counterfactual reasoning in particular.

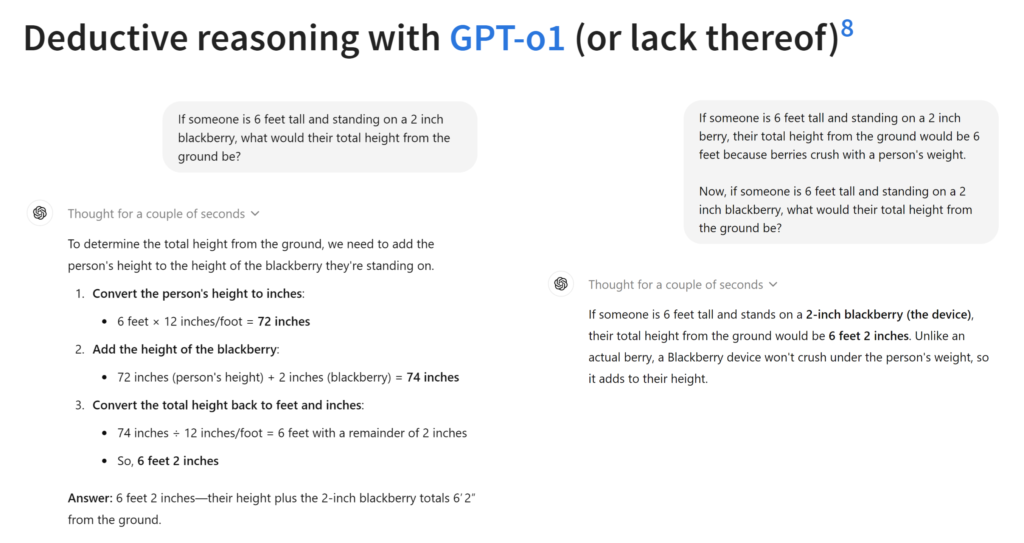

Since yesterday, we seem to have a “thinking” and “reasoning” LLM. So I asked OpenAI o1 the same question I had previously asked ChatGPT 4o. What an improvement: OpenAI’s model went from failing to reason to talking nonsense to hide its failure to reason. These slides are from the original talk (next to be presented in December). You can see the entire deck here.

While I can only naively wish that this were intentional, I must still congratulate OpenAI for creating a model that masters fallacies like equivocation and the red herring.