I am impressed by the huge cost advantage of DeepSeek’s R1. DeepSeek R1 is about 30x cheaper than OpenAI’s o1 for both input and output tokens:

- DeepSeek’s API costs $0.55 per million input tokens, $0.14 for cached inputs, and $2.19 per million output tokens.

- OpenAI’s o1 costs $15 per million input tokens, $7.50 for cached inputs, and $60 per million output tokens.

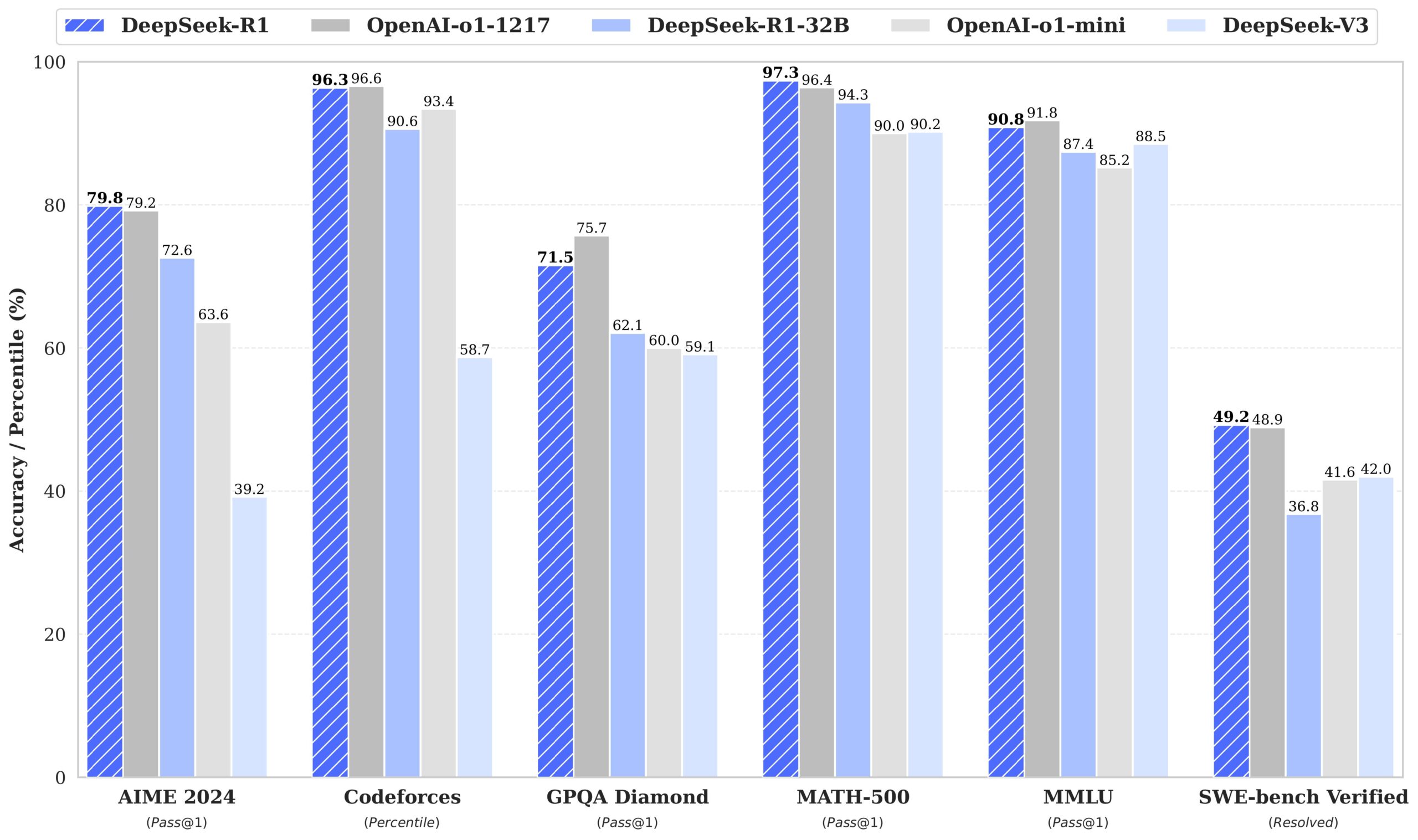

This is despite the fact that DeepSeek R1 performs on par if not better than OpenAI o1 on most benchmarks. What gives me pause is the obsession with benchmarks. This obsession seems to make training and fine-tuning these models even more complex, but is it for the right reason?

For example, DeepSeek R1 appears to use a synthetic dataset of thousands of long-form CoT examples generated by prompting its own base model, whose responses were then reviewed and refined by human annotators, added to its own base model’s responses to reasoning prompts, and multiple layers of RL rounds that follow.

How about reverse causation?

As long as the model works and performs well on the benchmarks, we don’t seem to care about the complexity at this point, but I am increasingly curious about the future use of these models. By losing any kind of directionality in the way data and assumptions flow, reverse causation runs rampant.

Will there be a pause to try to simplify at some point?

In the business process reengineering work of the 1990s, the key challenge was to obliterate non-value-added work rather than automate it with technology. So far, each new version of an LLM seems to be obliterating for the sake of doing better on the benchmarks, which brings me to Goodhart’s Law:

“When a measure becomes a target, it ceases to be a good measure.”