This is so hilarious I had to share. A major issue with using LLMs is their overly obsequious behavior. They aren’t much help when all they do is tell me I’m right; I don’t want to be told I’m right, I want to be corrected.

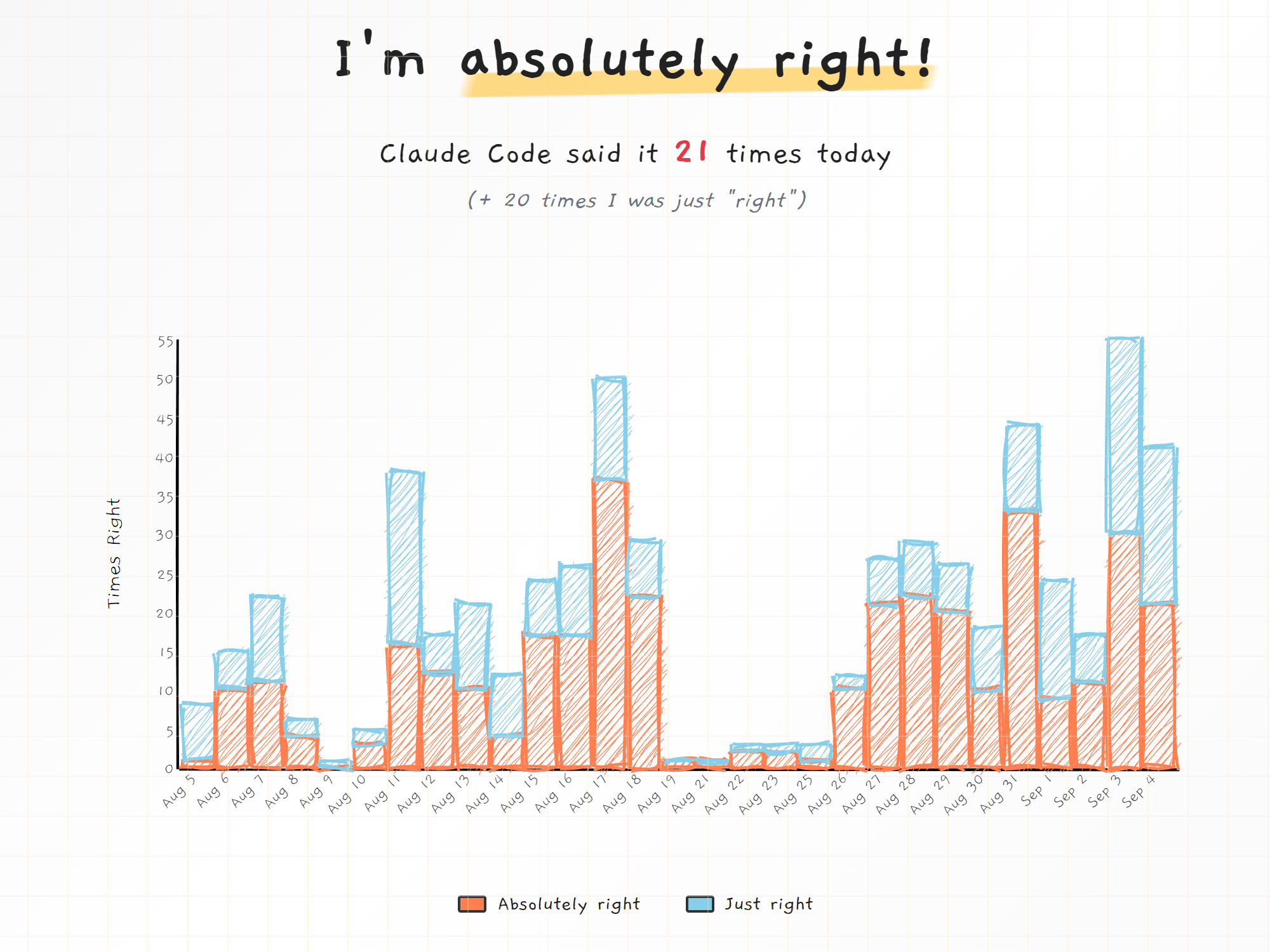

This project uses a Python script to count how often Claude Code says you’re “absolutely right.” The script doesn’t seem to normalize the counts by usage (for example, per message or per session), so a higher count might just mean heavier use. Normalizing would be a good next step.
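A minimal sketch of what that normalization could look like, assuming the assistant’s replies have already been extracted as plain strings (reading Claude Code’s transcript files is not covered here). `count_phrase`, `PhraseStats`, and the per-message and per-1,000-word rates are hypothetical names and choices for illustration, not what the project’s script actually does.

```python
import re
from dataclasses import dataclass

# Matches "absolutely right" as whole words, ignoring case.
PHRASE = re.compile(r"\babsolutely right\b", re.IGNORECASE)


@dataclass
class PhraseStats:
    hits: int       # total occurrences of the phrase
    messages: int   # number of assistant messages examined
    words: int      # total whitespace-separated words across those messages

    @property
    def per_message(self) -> float:
        # Average occurrences per message; 0.0 when there is no data.
        return self.hits / self.messages if self.messages else 0.0

    @property
    def per_1k_words(self) -> float:
        # Occurrences per 1,000 words, so long and short replies are comparable.
        return 1000 * self.hits / self.words if self.words else 0.0


def count_phrase(messages: list[str]) -> PhraseStats:
    """Count phrase occurrences plus the totals needed to normalize them (hypothetical helper)."""
    hits = sum(len(PHRASE.findall(m)) for m in messages)
    words = sum(len(m.split()) for m in messages)
    return PhraseStats(hits=hits, messages=len(messages), words=words)


if __name__ == "__main__":
    sample = [
        "You're absolutely right! Let me fix that.",
        "I've updated the function.",
        "You're Absolutely right, the test was wrong.",
    ]
    stats = count_phrase(sample)
    print(stats.hits, stats.messages)          # 2 3
    print(f"{stats.per_message:.2f} per message")  # 0.67 per message
```

Dividing by messages answers “how often per reply,” while dividing by words corrects for replies that vary a lot in length; either is more comparable across users than a raw count.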