Pluribus knows everything, and understands nothing – just like an LLM!

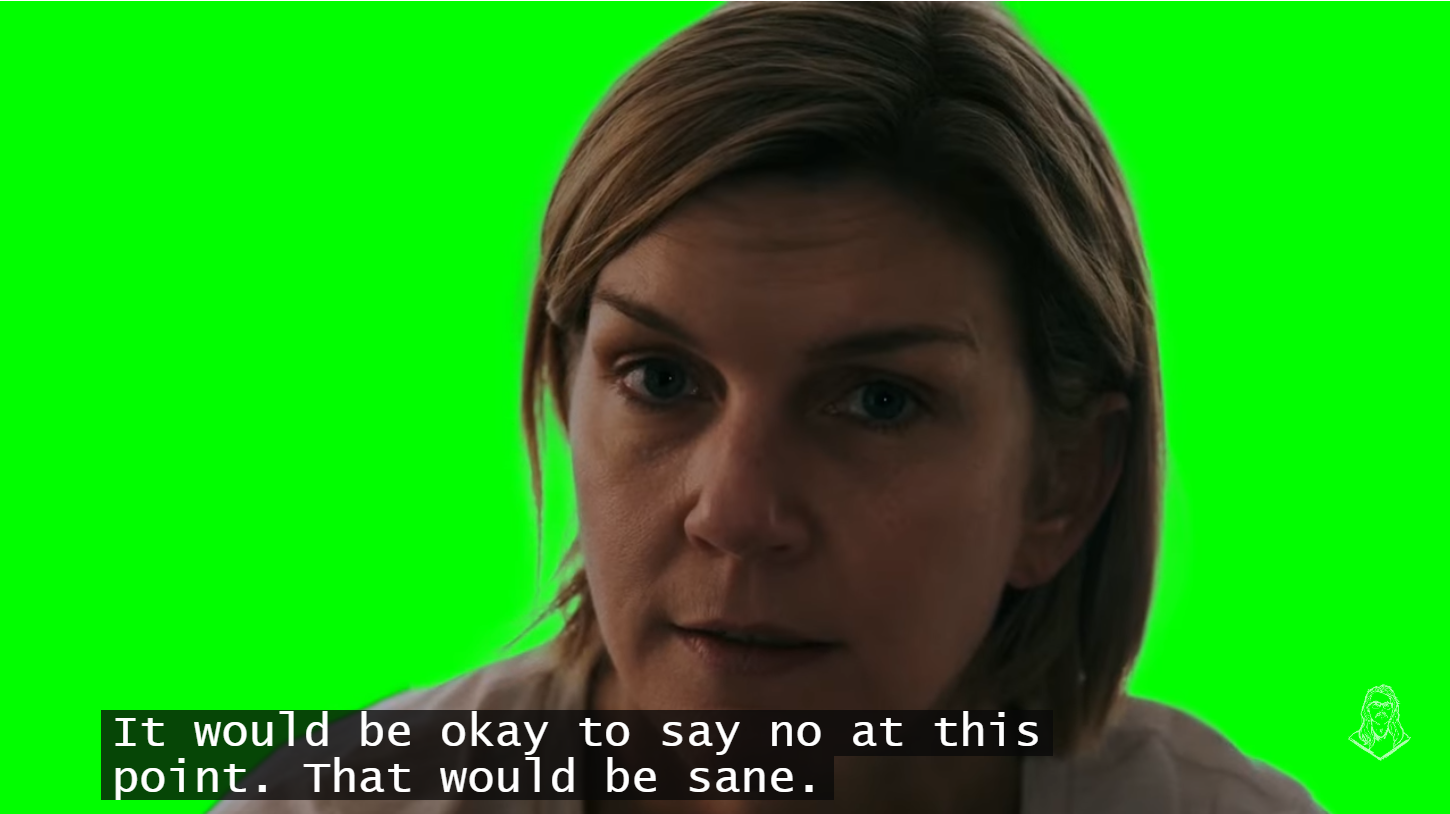

I’ve finally found the time to start watching Pluribus. I’m only two episodes in, but the analogy to LLMs is already clear. The crux of this comparison for me is the scene where Carol tries to convince the unified human entity that it is okay to say “no” and not be obsequious.

Like an LLM, the show’s unified human entity (which possesses all human thoughts and knowledge) “knows” everything but “understands” nothing. This ties in neatly with my take on LLMs in my talk, “Mind the AI Gap: Understanding vs. Knowing.”

Clearly, I was not the first to make this connection (see this summary, for example). As I understand it, Vince Gilligan didn’t intend the show to be about LLMs or AI; he wrote the story long before ChatGPT. That makes it even more fascinating: he imagined a being with total knowledge but no agency or imagination (and thus no counterfactual reasoning), and that being uncannily mirrors the behavior of an LLM.

Happy holidays to everyone!