After an unexpected hiatus, I’m pleased to announce the early release of a long-overdue project: Causal Book: Design Patterns in Causal Inference.

I started this project some time ago, but never had a chance to devote time to scoping it. I finally got around to it, and the first chapter is almost done. I keep going back to it, so it might change a little more along the way.

This is an accessible, interactive book for the data science / causal inference audience. Some chapters should also read well to the business audience.

This book is not meant to replace the great, accessible books already out there. Two that come to mind are The Effect and The Mixtape. Kudos to Nick and Scott for these great resources.

Our goal here is to complement what’s out there by using the idea of design patterns:

(1) focus on solutions to problem patterns and their code implementations in R and Python,

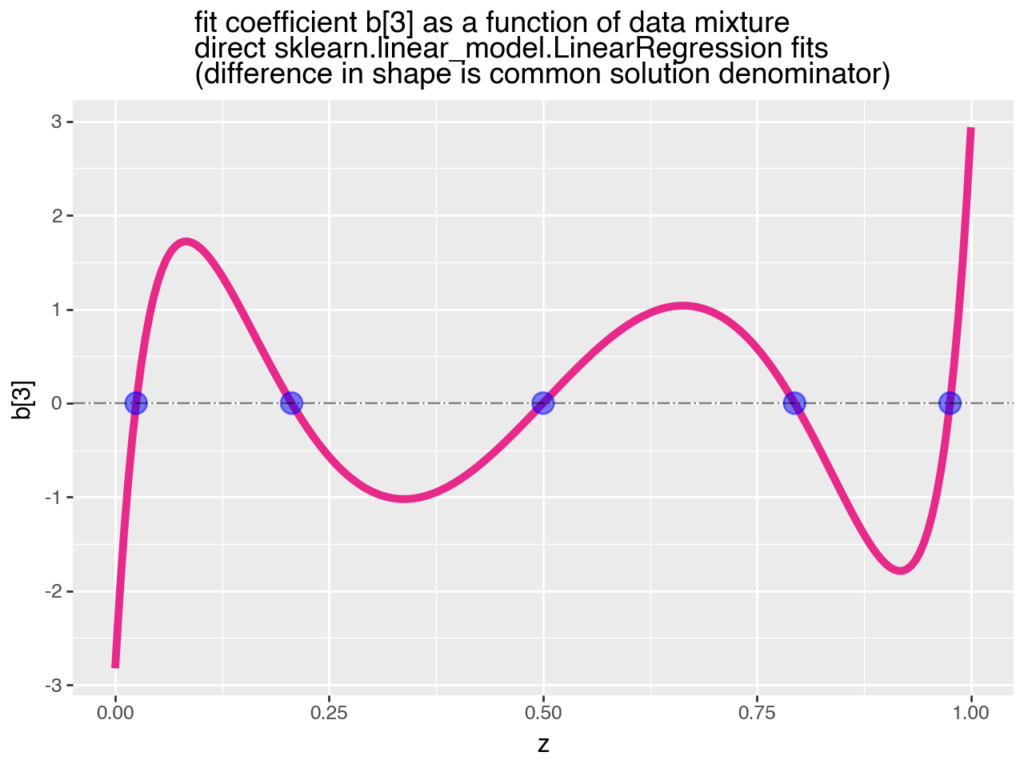

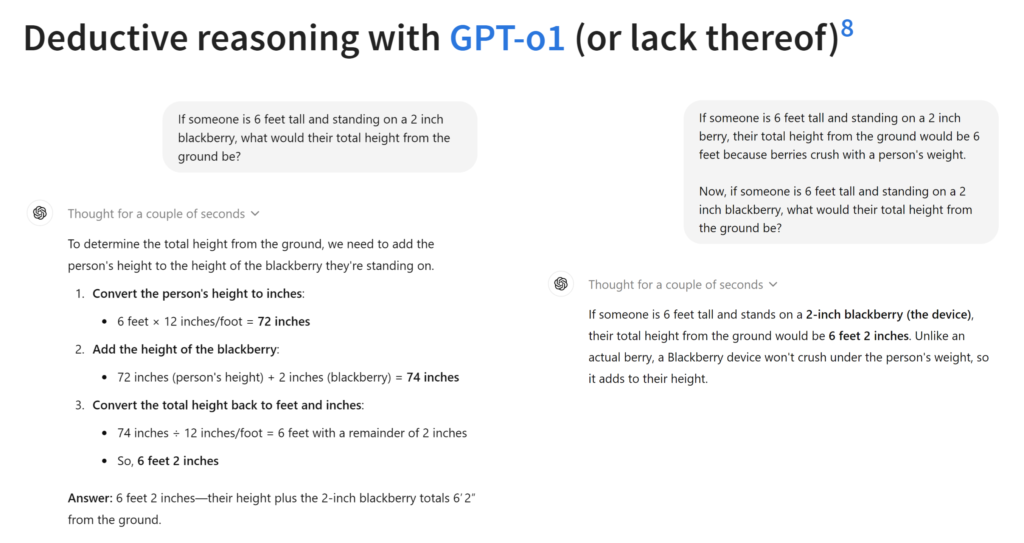

(2) discuss the implications of taking different approaches to the same problem when modeling the same data,

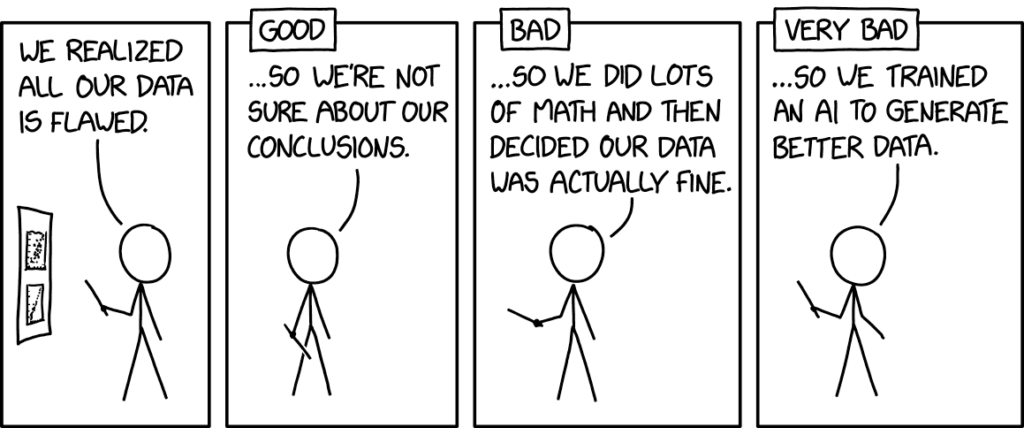

(3) explain some of the surprising (or seemingly surprising) challenges in applying the causal design patterns.

It’s a work in progress, but now that it’s scoped, more is on the way. Versioning and references are up next. I will post updates along the way.

Finally, why design patterns? Early in my career, I was a programmer using C# and then Java. Our most valuable resources back then were design patterns. I still have a copy of the book Head First Design Patterns on my bookshelf from 20 years ago. It was a lifesaver when I moved from C# to Java. This book is a tribute to those days.