“AI in 64 Pictures”

A visual journey

(Updated in July, 2025)

Paul College of Business and Economics

June, 2025

“AI” in 64 Pictures¹

The “AI” in the title is misleading: this is a visual journey through language processing.

The slides are inspired by various sources, including Jay Alammar, Chris McCormick, and Lena Voita. Images are sourced from the original authors’ websites and other online resources. References are included in the slides.

The deck is intentionally image-heavy, with minimal text, to support an interactive format and facilitate additional insights in a discussion.


This deck originally served as a prologue to “Mind the AI Gap: Understanding vs. Knowing,” a talk about the role of AI in learning.

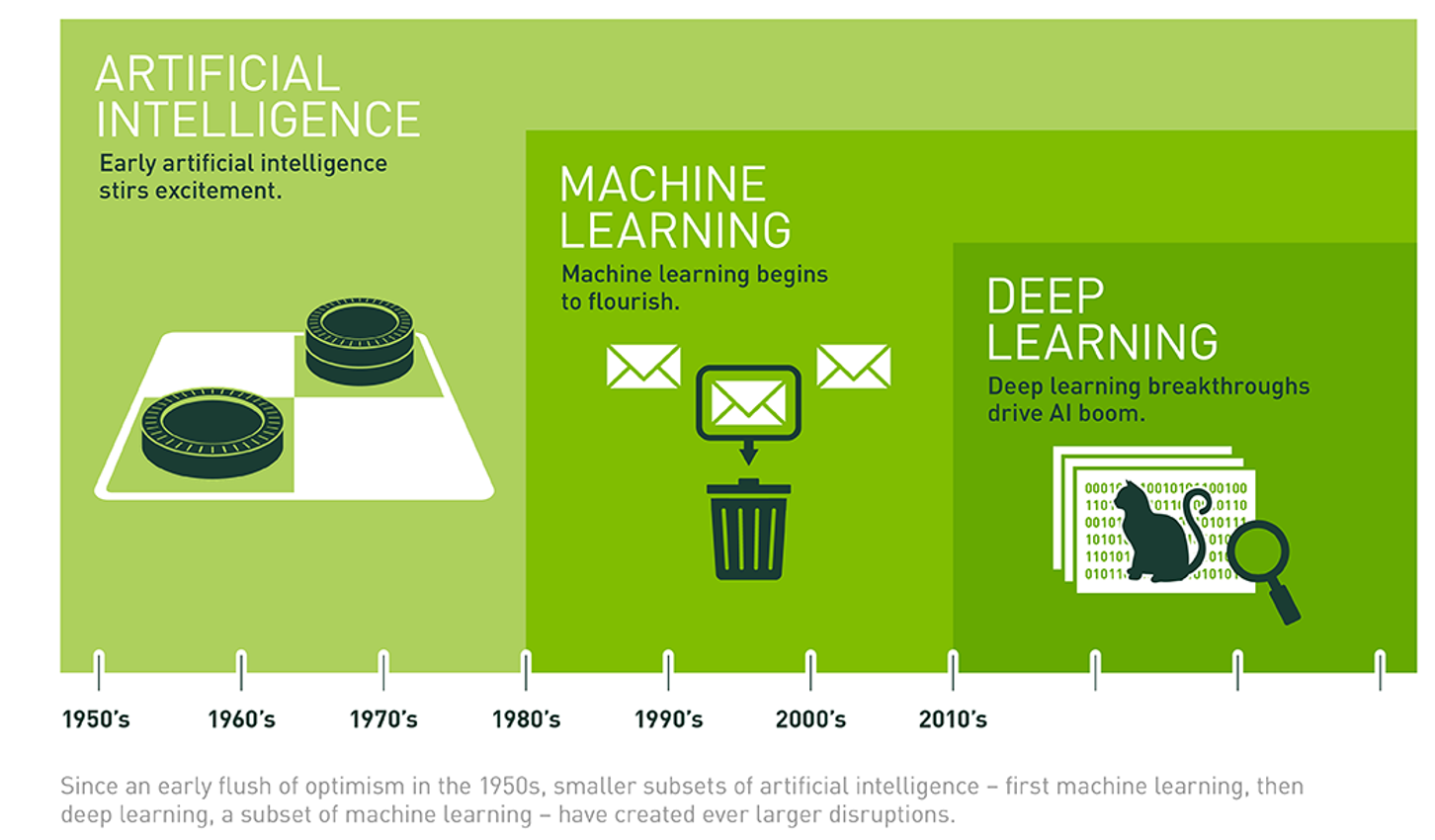

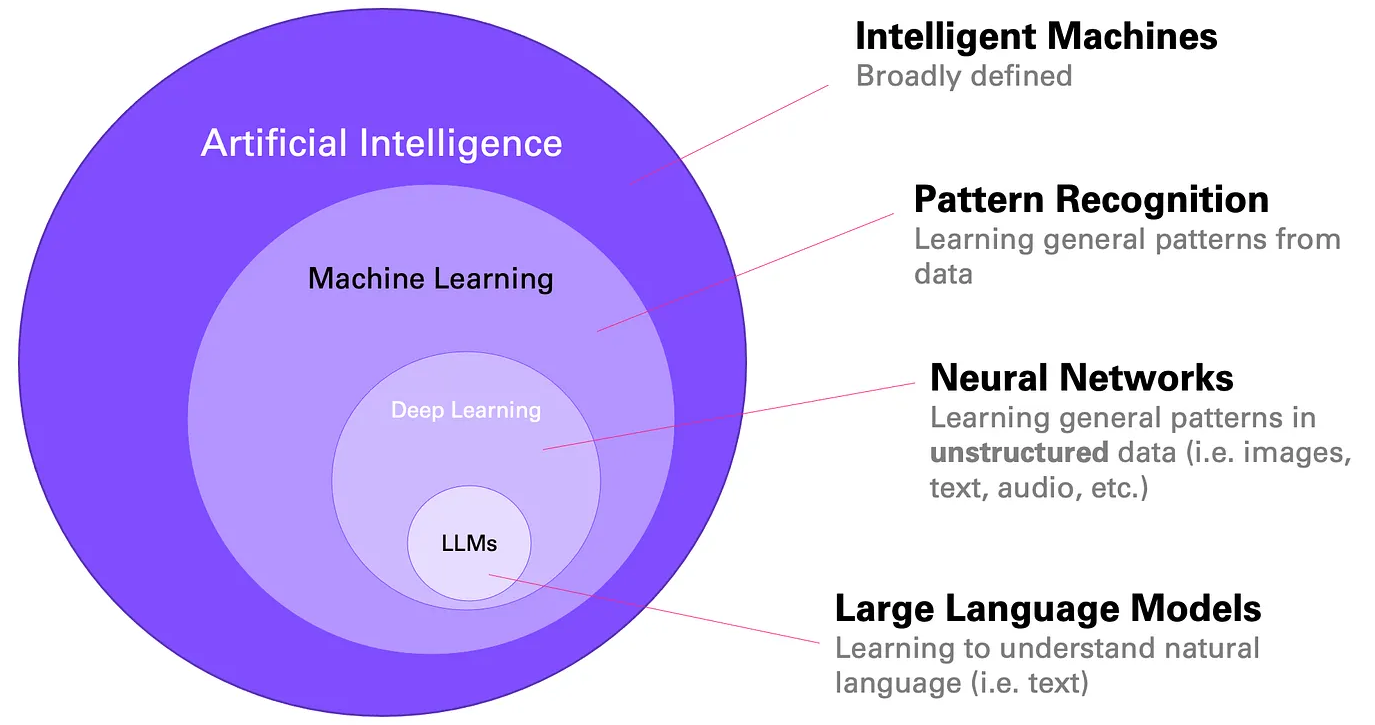

What is AI? (1/2)

Figure 1: NVIDIA Glossary - “Machine Learning”

What is AI? (2/2)

Figure 2: Microsoft Research - “How Large Language Models work”

So using “AI” to refer to “LLMs” is a very limited view.

But popularity wins.

What’s Inside (More or Less)

Early days of modern NLP: Word Embeddings and RNNs

Understanding the quantification of text: Word2Vec

Using word vector embeddings in RNNs

Adding “attention” to RNNs

Small picture: From RNNs to transformers

- Keep the attention, drop the RNNs

Big picture: The Transformer explained

From prompting to asking

Prompting for results

Asking for results: Agents

I. EARLY DAYS (2010s!)

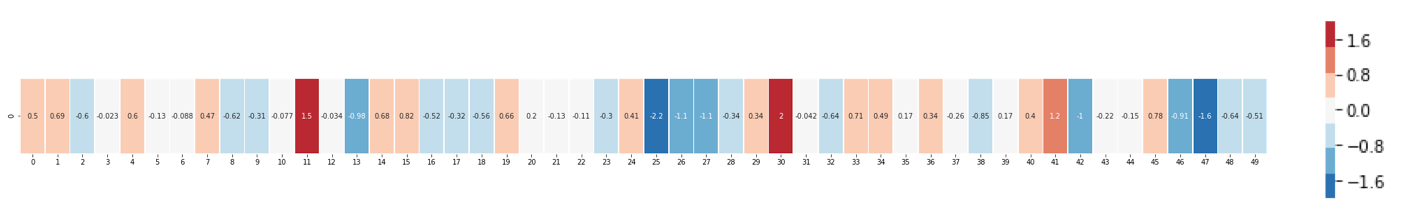

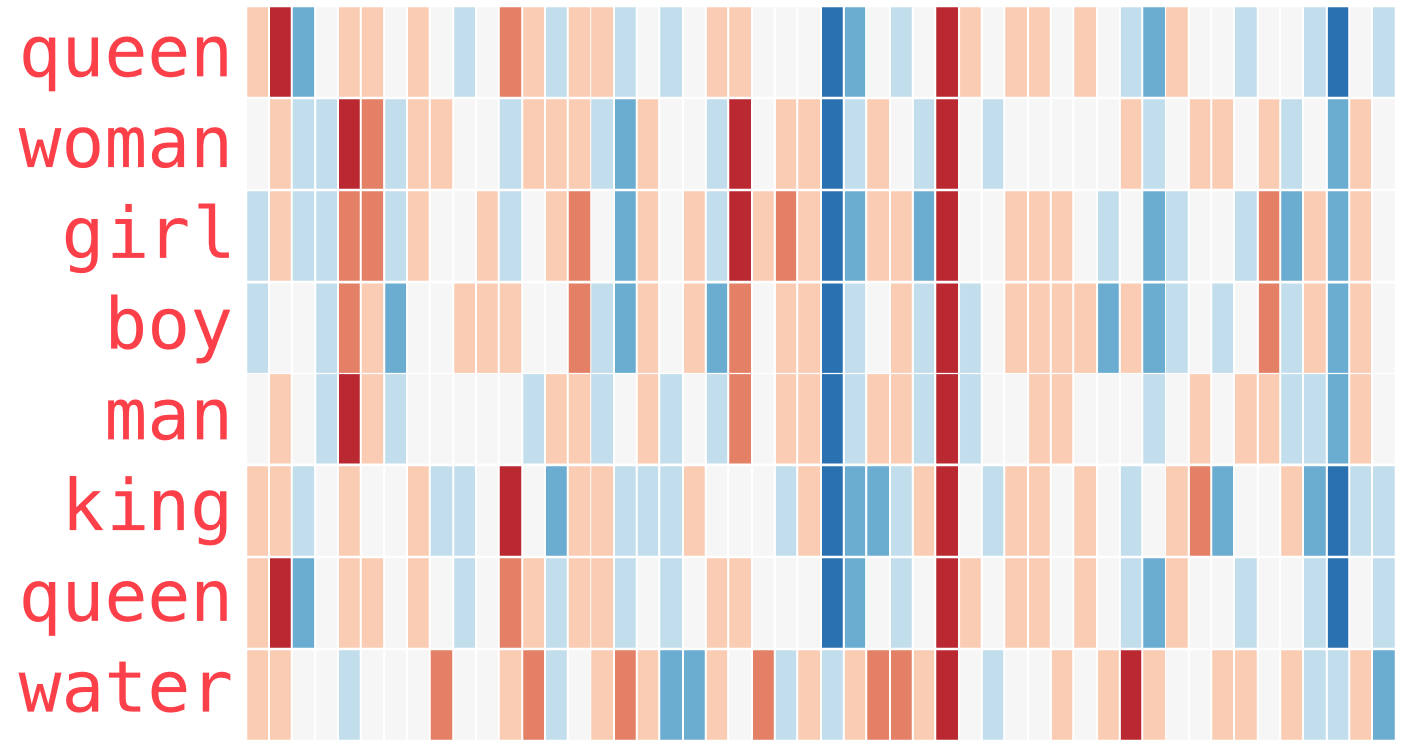

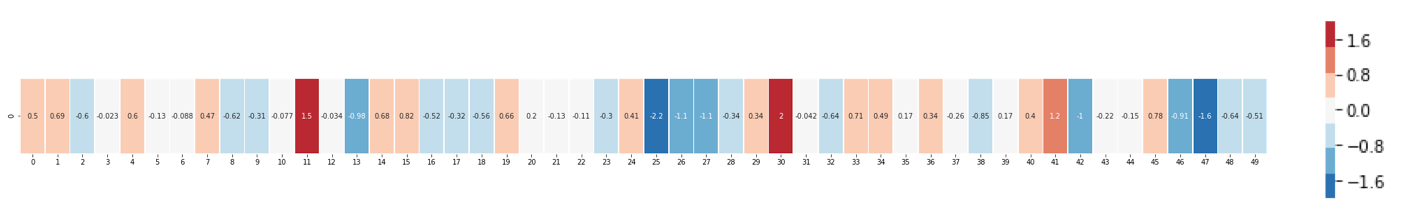

Quantification of text: Word2Vec (1/3)

Quantification of text: Word2Vec (2/3)

Quantification of text: Word2Vec (3/3)

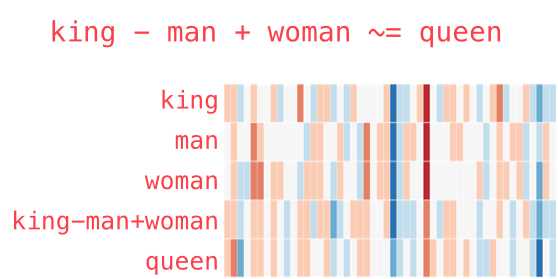

Figure 6: Jay Alammar - “The Illustrated Word2vec”

The resulting vector from “king-man+woman” doesn’t exactly equal “queen”, but “queen” is the closest word to it from the 400,000 word embeddings in this collection.
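The arithmetic can be tried on a toy scale. The sketch below assumes five hand-made 3-dimensional vectors (invented for illustration, not taken from any trained model) and a hypothetical `analogy` helper that ranks the remaining words by cosine similarity.

```python
import math

# Toy 3-dimensional embeddings, invented for illustration only.
# Real Word2Vec or GloVe vectors have tens to hundreds of dimensions.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.2, 0.2],
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c, embeddings=EMBEDDINGS):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b, c."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = (w for w in embeddings if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine(target, embeddings[w]))

result = analogy("king", "man", "woman")
print(result)
```

As in the real embedding collection, the computed vector is not identical to the stored vector for the winning word; that word is only the nearest neighbor.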

What we have seen is a quantification of text. Next, we will see how exactly we quantified the text (Hint: Not so deep learning).

P.S. We skipped a preceding approach: Bag of Words (BoW). That’s because BoW is counting words (more or less).
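For the curious, “counting words” fits in a few lines. This is a minimal sketch using a hypothetical `bag_of_words` helper and whitespace tokenization (an assumption; real pipelines tokenize more carefully).

```python
from collections import Counter

def bag_of_words(text):
    """Count lowercase word occurrences; word order is discarded."""
    return Counter(text.lower().split())

doc_a = bag_of_words("the dog bit the man")
doc_b = bag_of_words("the man bit the dog")

# Both sentences produce the same counts, although they mean different things.
print(doc_a == doc_b)
```

The two sentences are indistinguishable once reduced to counts, which is one reason to move on to embeddings.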

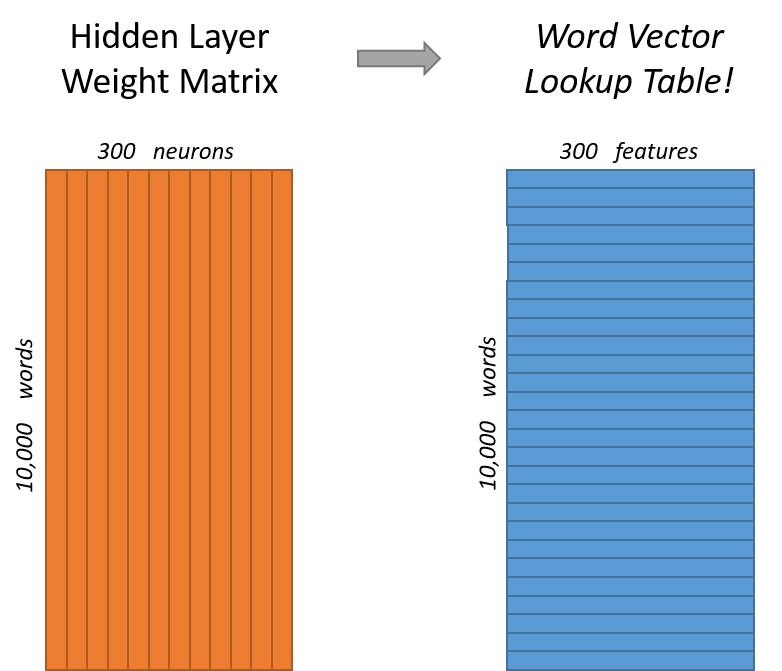

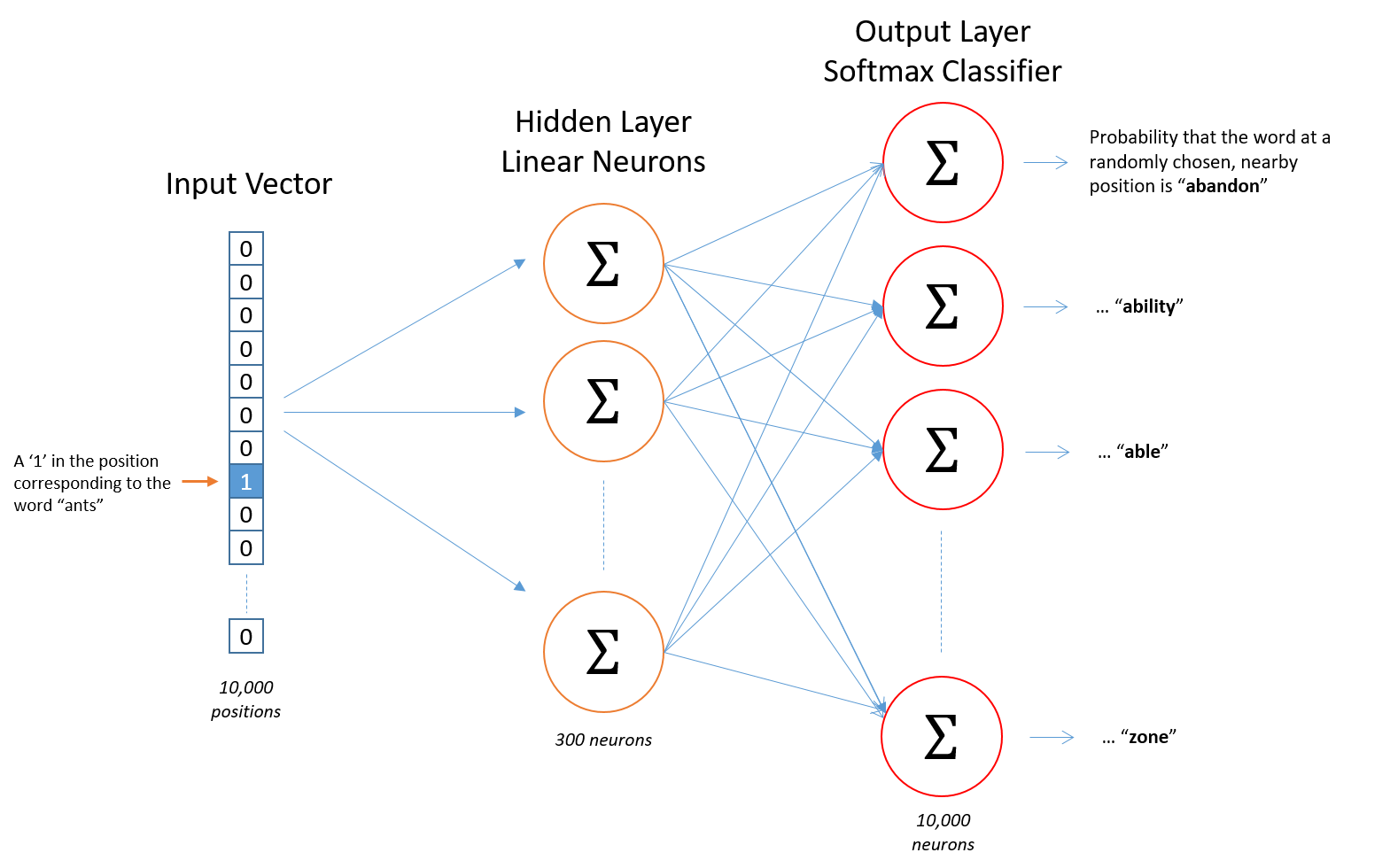

How did we quantify the text? (1/2)

Figure 7: Chris McCormick - “Word2Vec Tutorial - The Skip-Gram Model”

How did we quantify the text? (2/2)

Figure 8: Chris McCormick - “Word2Vec Tutorial - The Skip-Gram Model”
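The skip-gram model learns from (center word, context word) pairs taken from a sliding window over the text. The sketch below covers only that pair-generation step; the function name `skipgram_pairs` and the window size of 2 are assumptions for illustration, and the neural network trained on these pairs is omitted.

```python
def skipgram_pairs(tokens, window=2):
    """Pair each center word with every neighbor within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

sentence = "the quick brown fox jumps over the lazy dog".split()
pairs = skipgram_pairs(sentence, window=2)

for center, context in pairs[:5]:
    print(center, "->", context)
```

Each pair becomes one training example: given the center word, predict the context word. The embeddings are the weights the network learns along the way.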

So far so good, but word embeddings are static, while human language is quite dynamic (“LLMs” are called “AI” these days!).

Let’s do something about that.

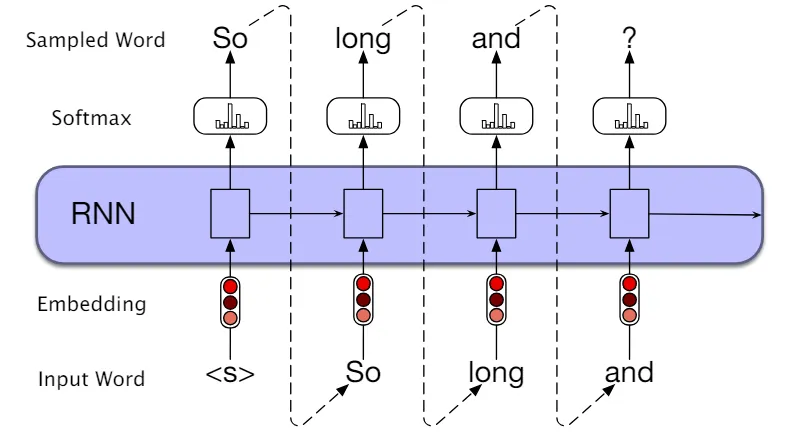

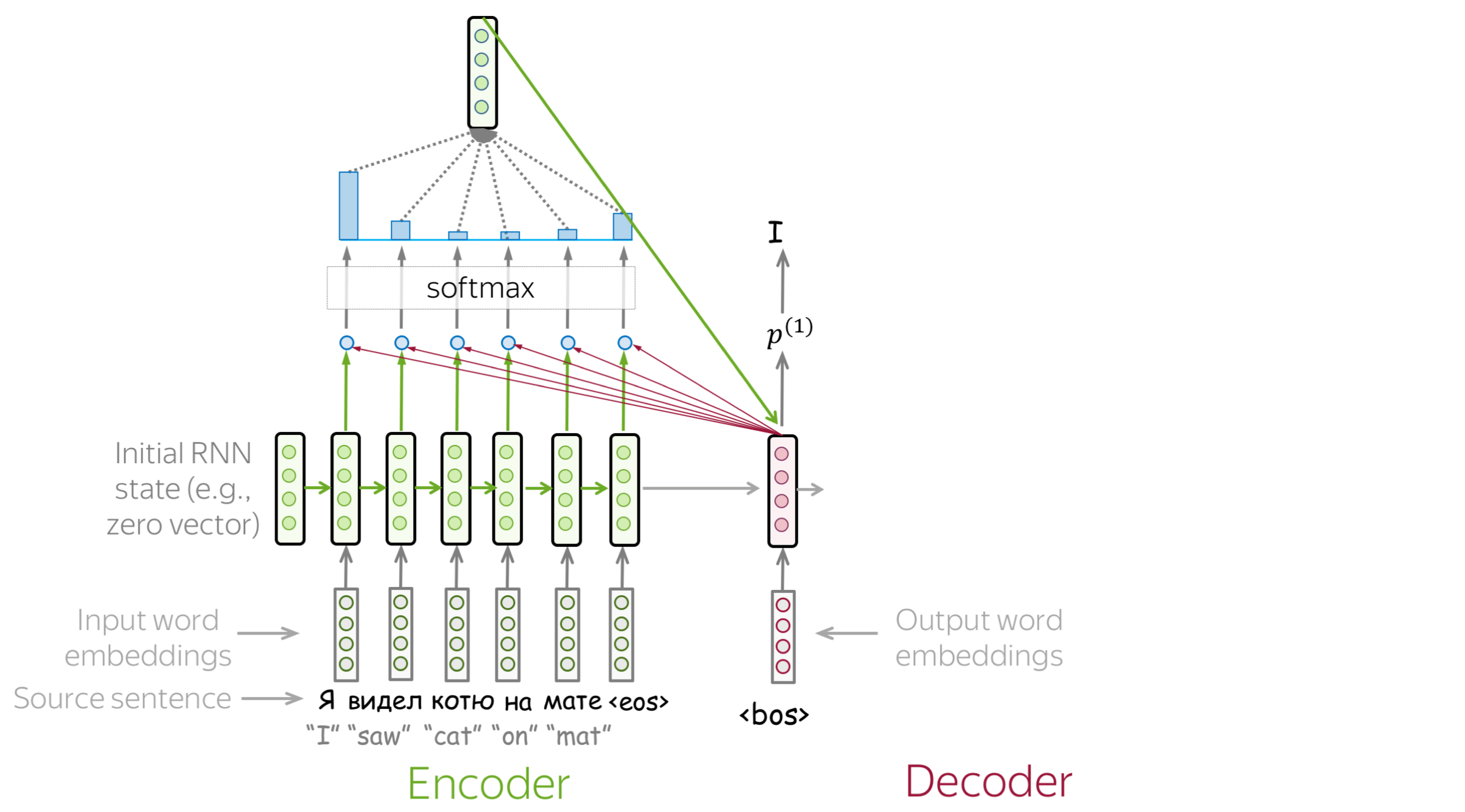

Using embeddings in RNNs (1/2)

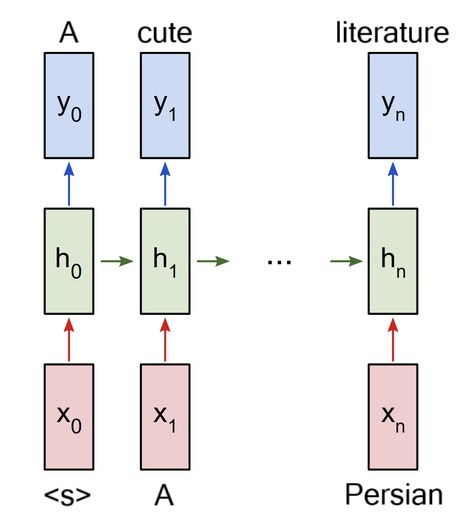

Word vectors (embeddings) are static representations of text.

Recurrent Neural Networks (RNNs) can process sequences of embeddings to dynamically capture the context of words.
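A rough sketch of the idea, assuming a basic Elman-style update `h = tanh(W_x x + W_h h_prev + b)` with hand-picked weights and made-up 2-dimensional embeddings (none of these numbers come from a trained model). The same word ends up with a different hidden state depending on what came before it.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent update: h = tanh(W_x x + W_h h_prev + b)."""
    from_input = matvec(w_x, x)
    from_memory = matvec(w_h, h_prev)
    return [math.tanh(i + m + bias) for i, m, bias in zip(from_input, from_memory, b)]

def run_rnn(sequence, w_x, w_h, b):
    """Feed embeddings one at a time, carrying the hidden state forward."""
    h = [0.0] * len(b)
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Hypothetical embeddings and weights, chosen by hand for illustration.
EMB = {"river": [1.0, 0.0], "money": [0.0, 1.0], "bank": [0.5, 0.5]}
W_X = [[0.5, -0.5], [0.3, 0.8]]
W_H = [[0.9, 0.0], [0.0, 0.9]]
B = [0.0, 0.0]

h_river_bank = run_rnn([EMB["river"], EMB["bank"]], W_X, W_H, B)[-1]
h_money_bank = run_rnn([EMB["money"], EMB["bank"]], W_X, W_H, B)[-1]
print(h_river_bank)
print(h_money_bank)
```

The embedding for “bank” is identical in both runs; only the carried-over hidden state differs.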

Using embeddings in RNNs (2/2)

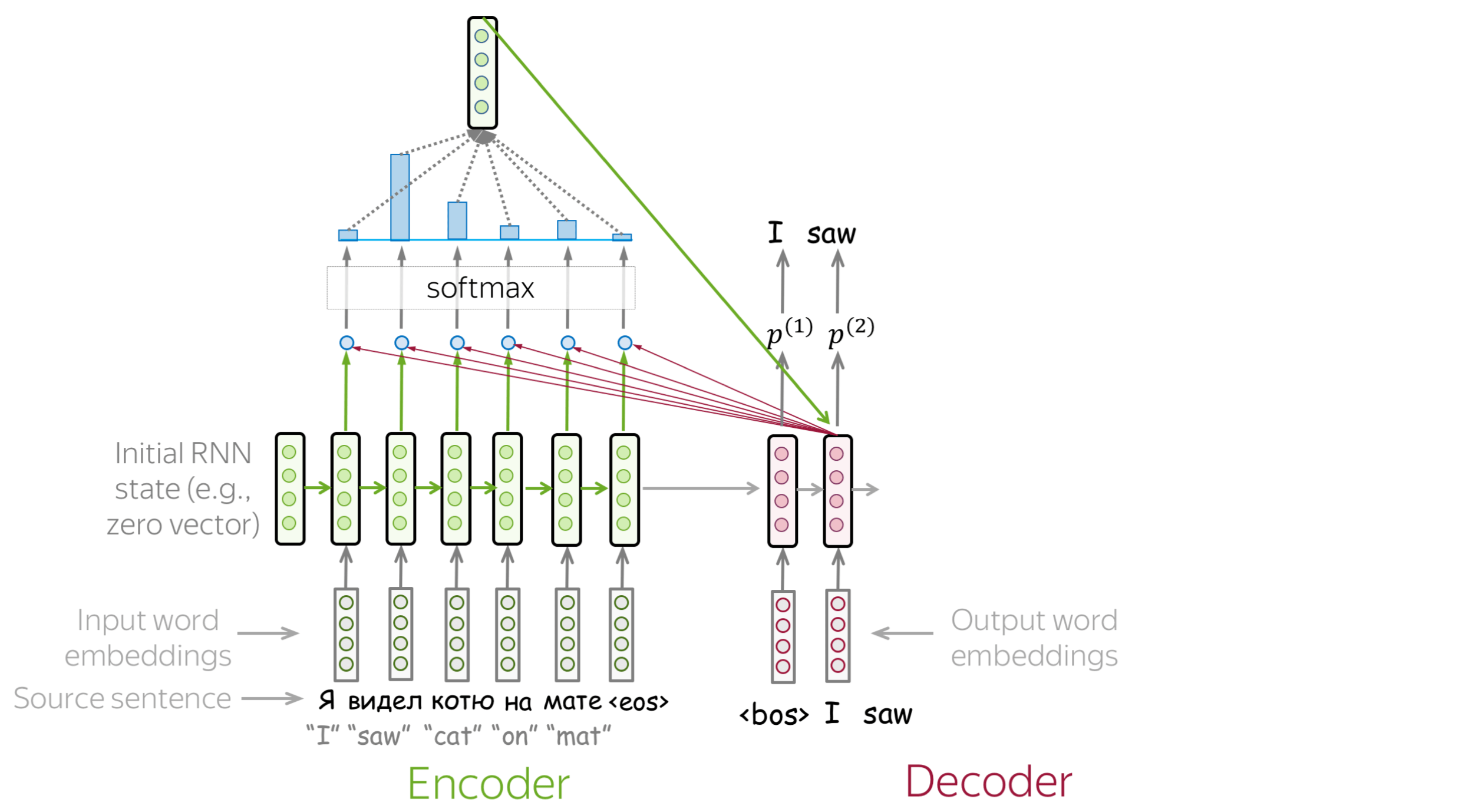

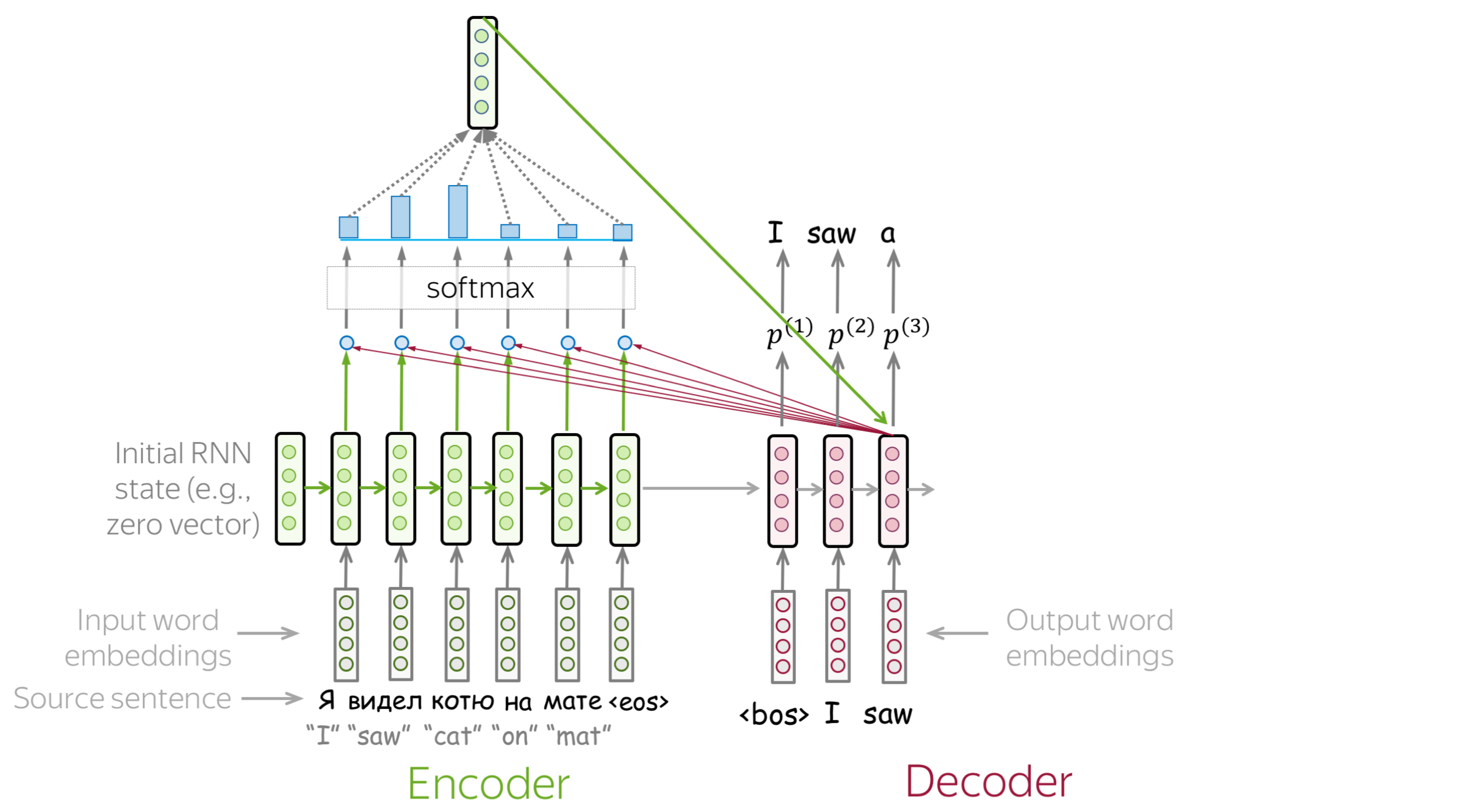

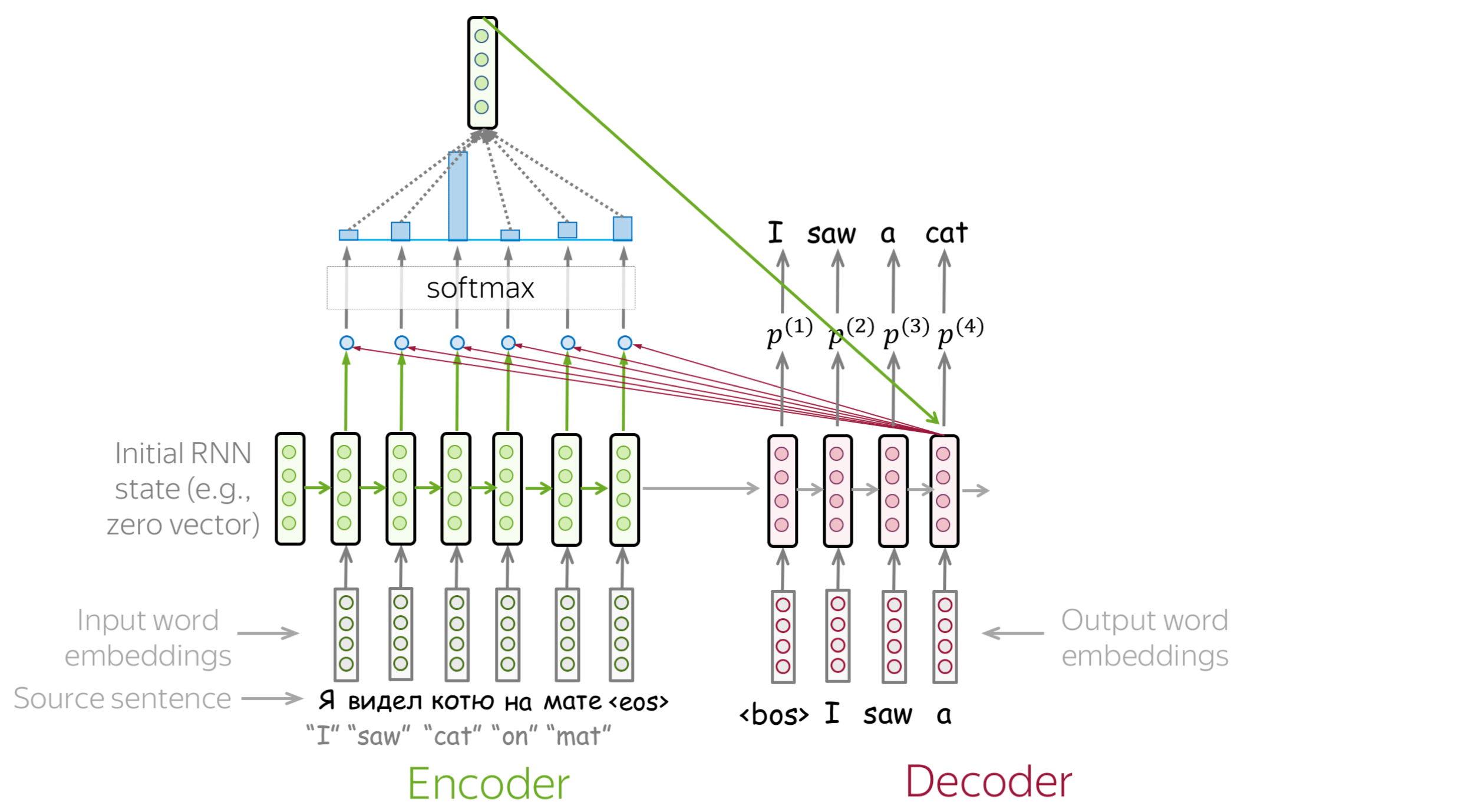

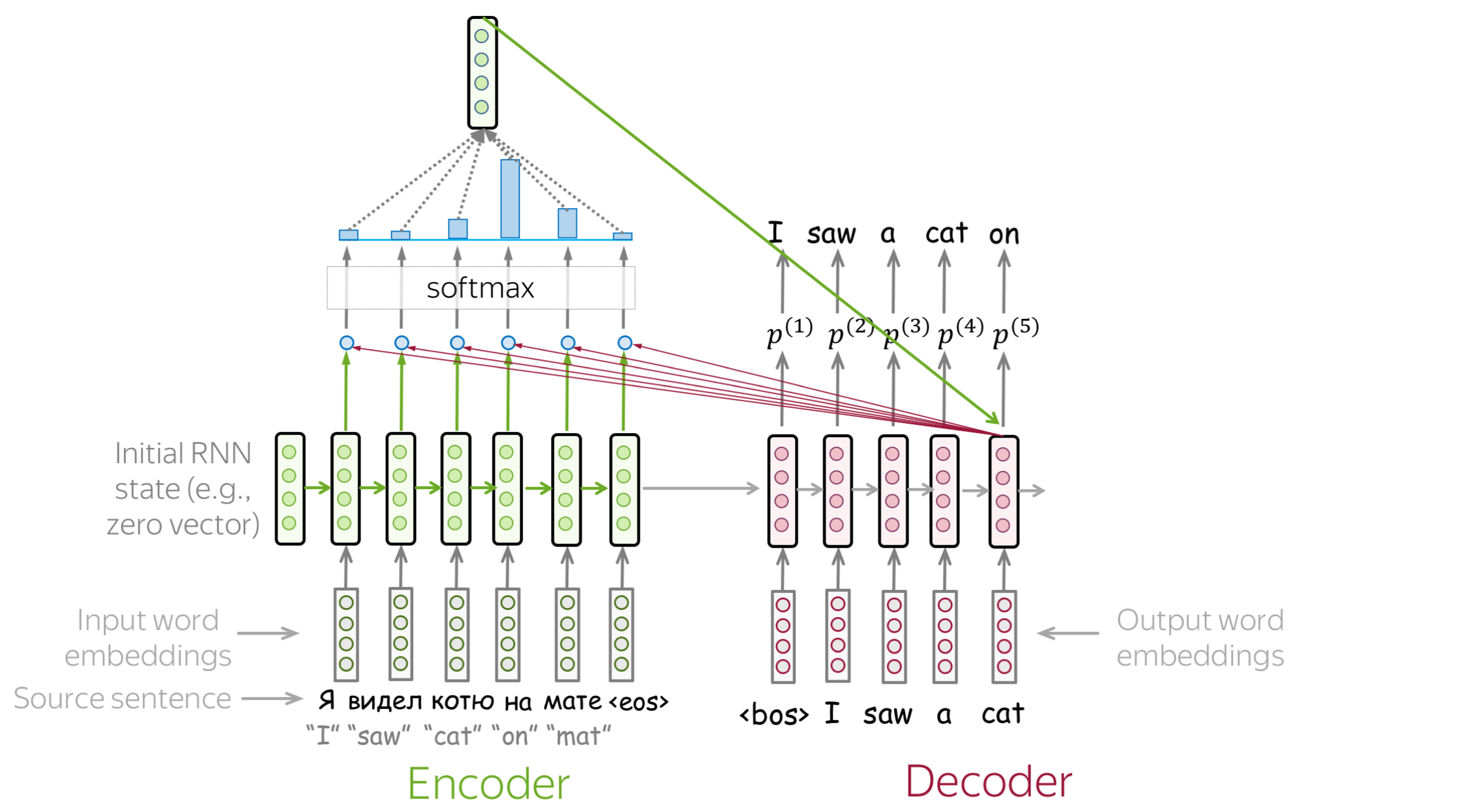

The attention mechanism was introduced (added to the RNN decoder) to help the model focus on the relevant parts of text.

We used RNNs to dynamically process word embeddings, updating the context of the words, and paying attention.

Why is attention so important? Let’s see an application.

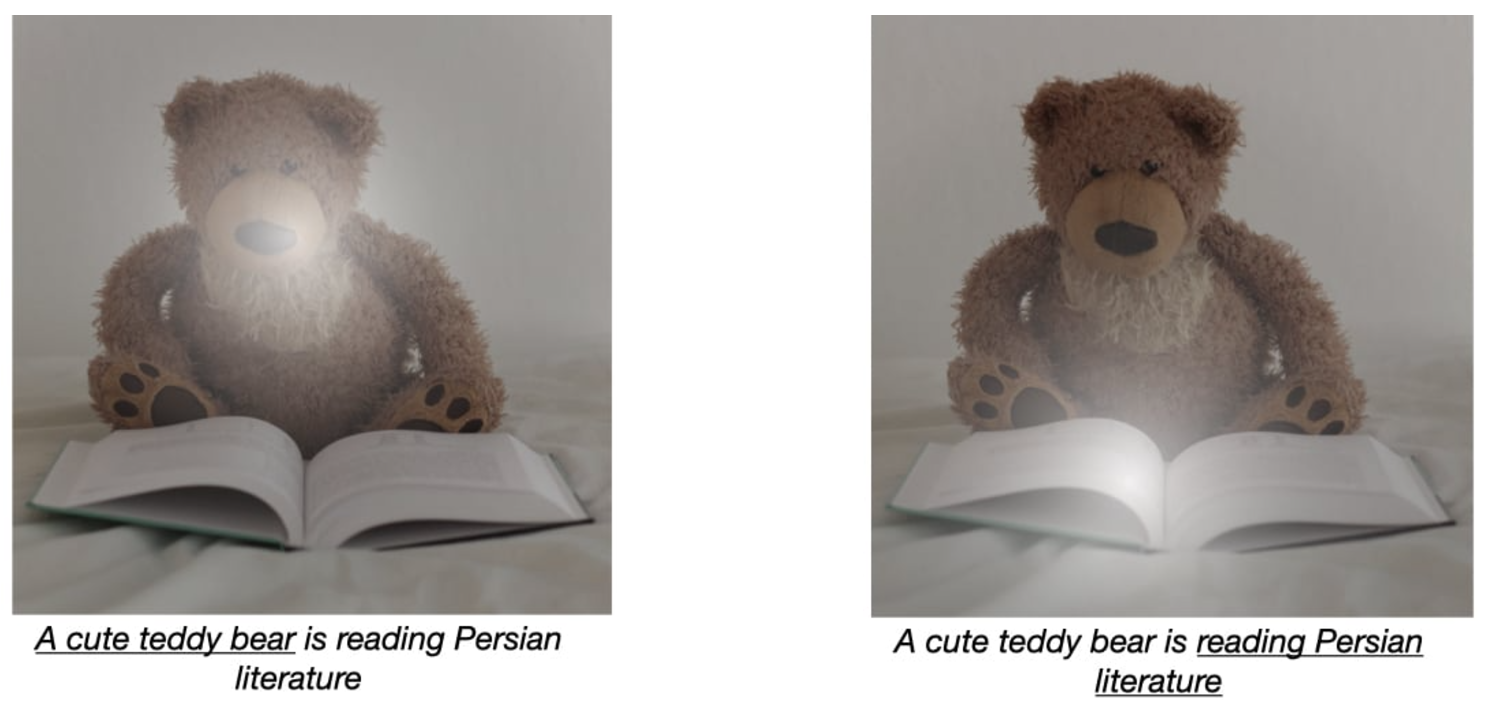

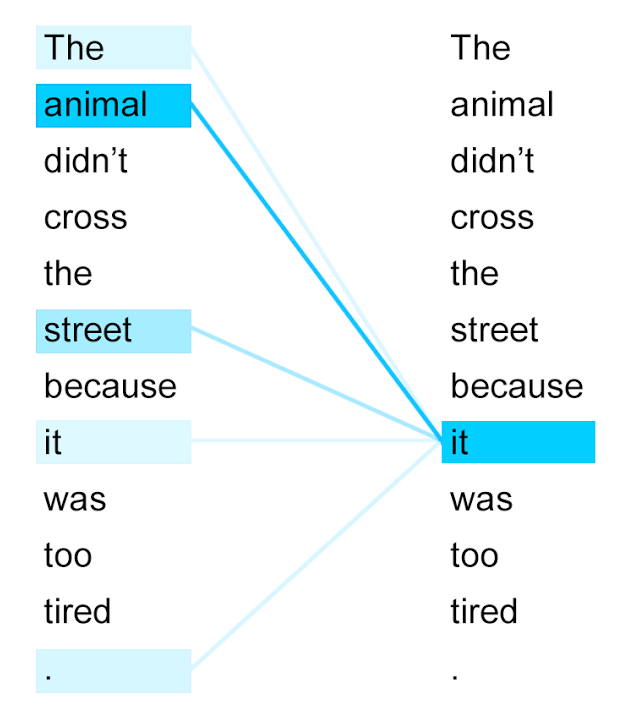

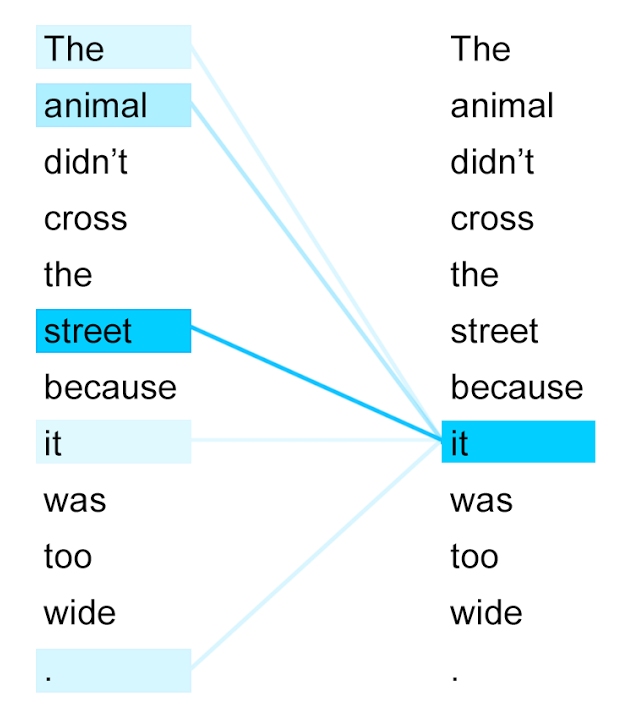

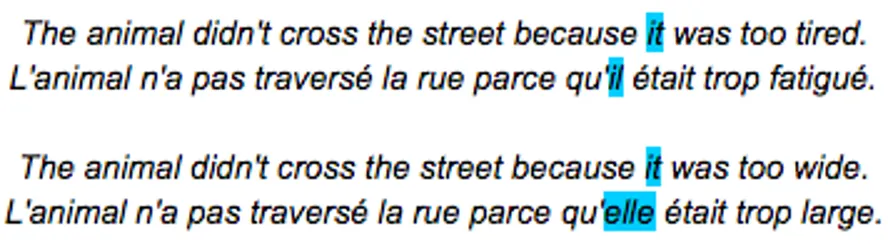

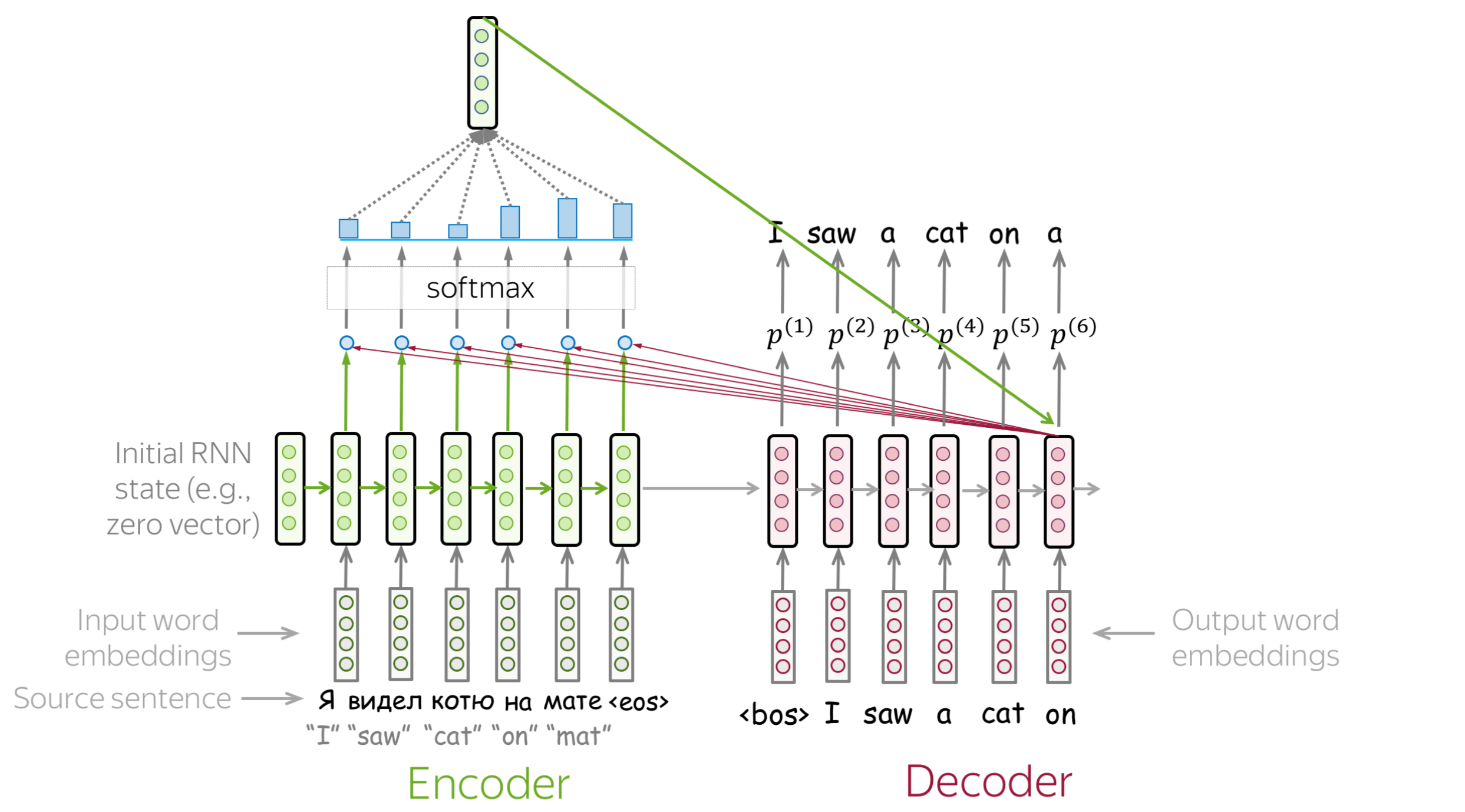

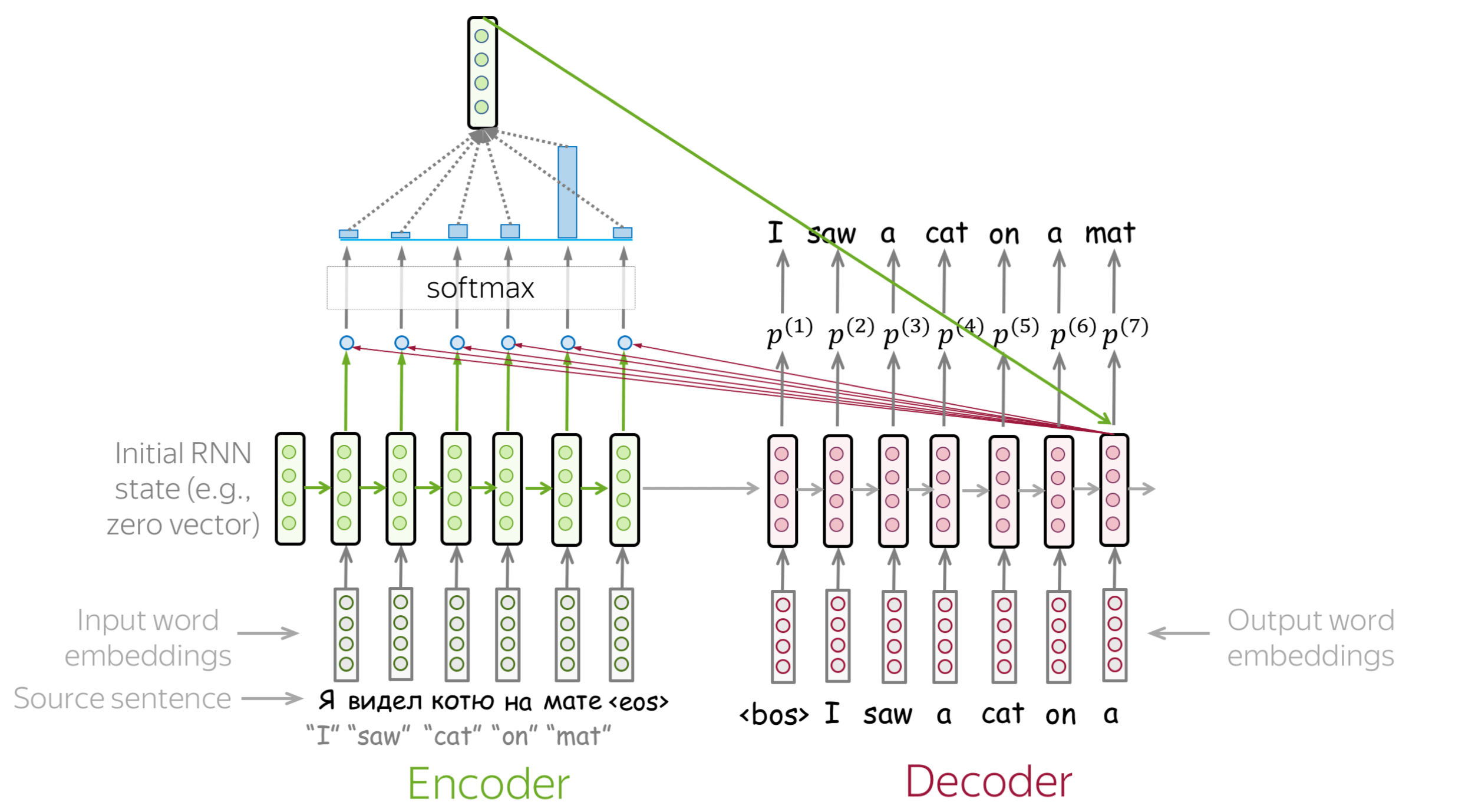

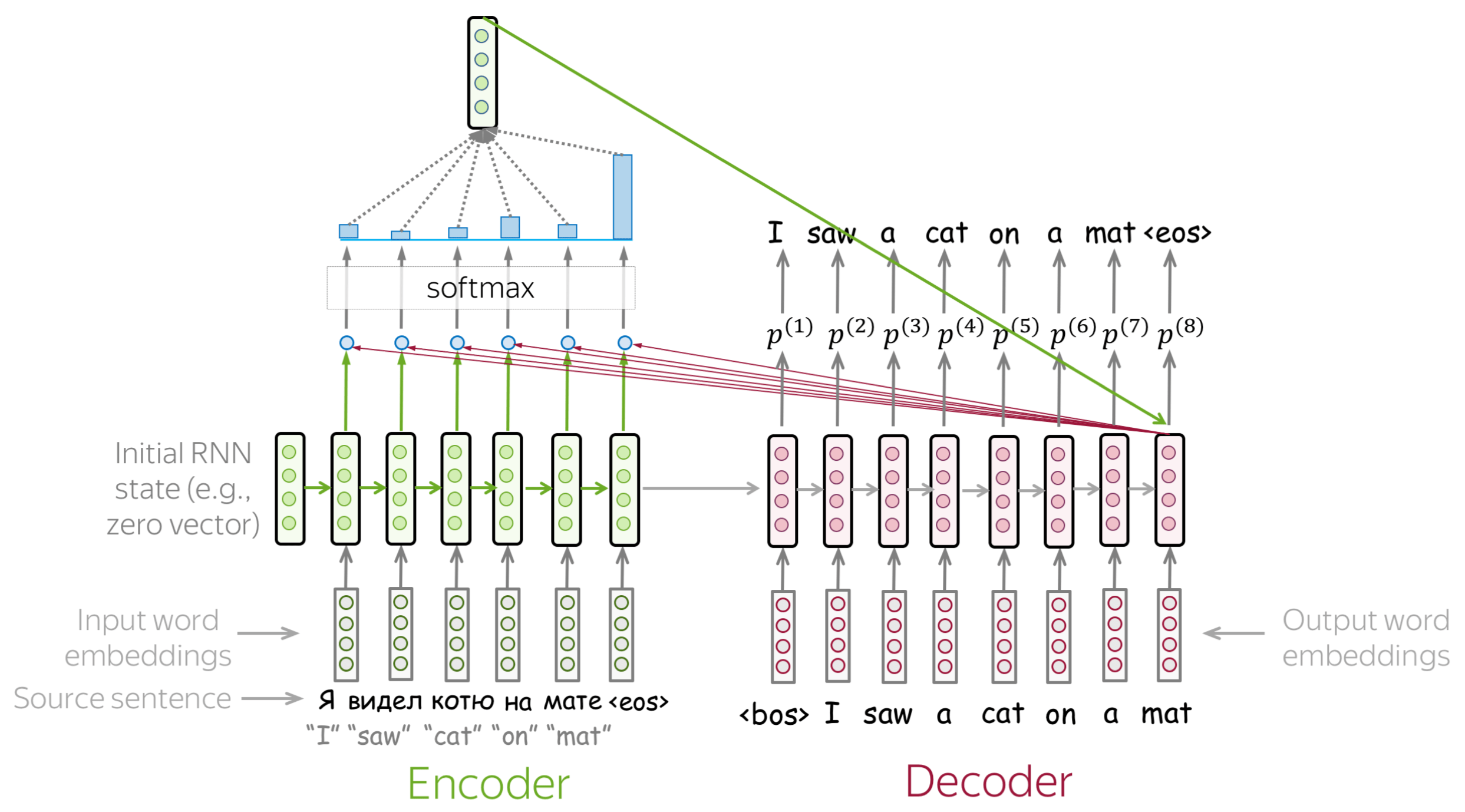

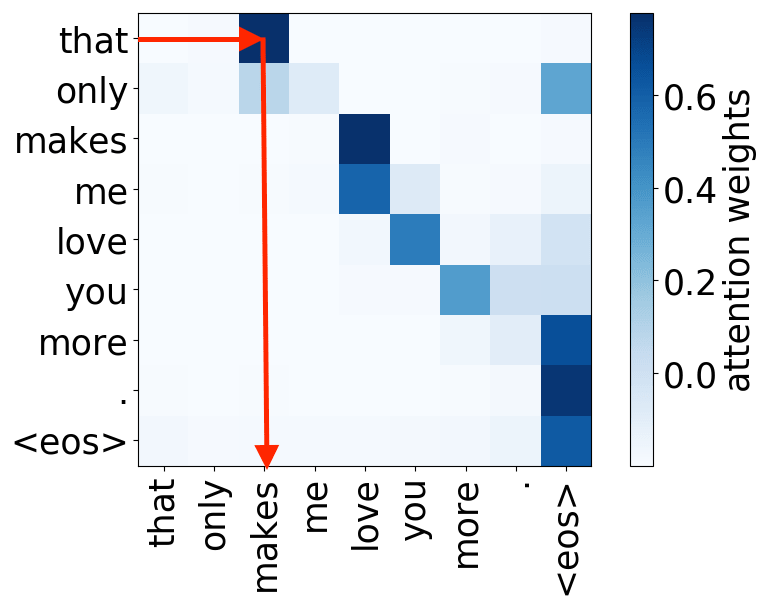

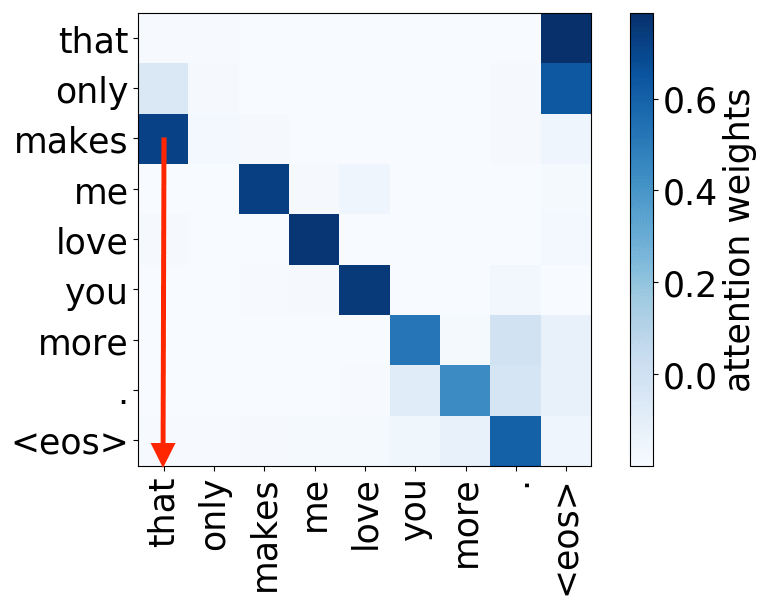

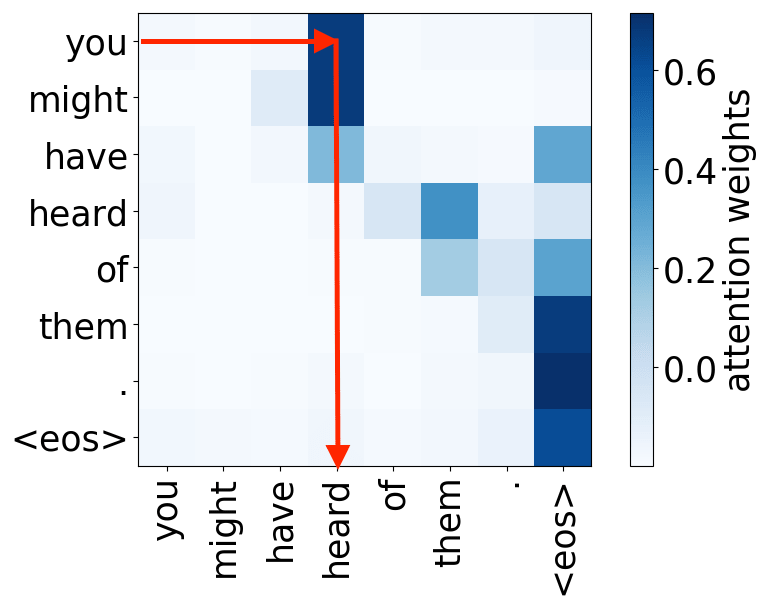

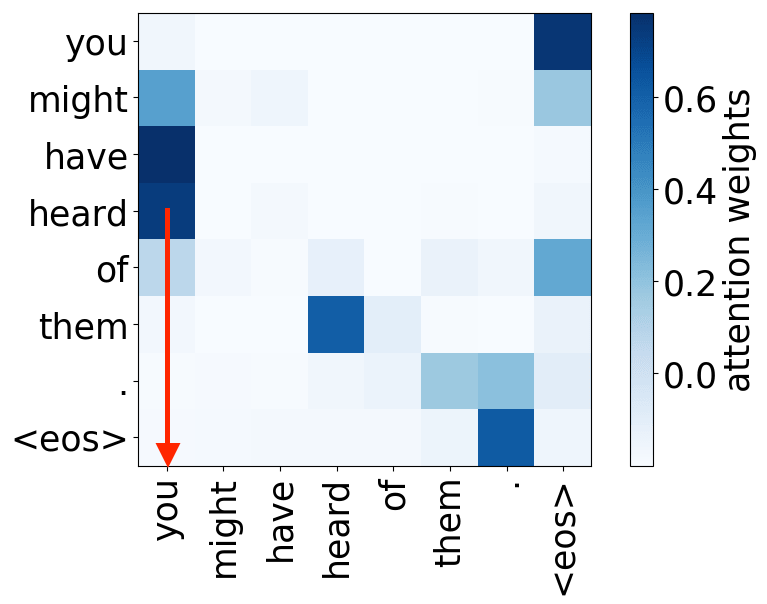

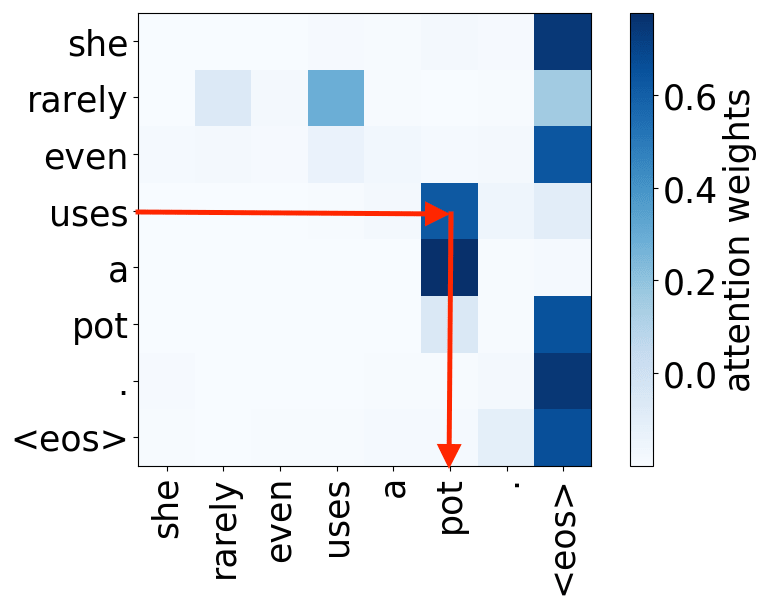

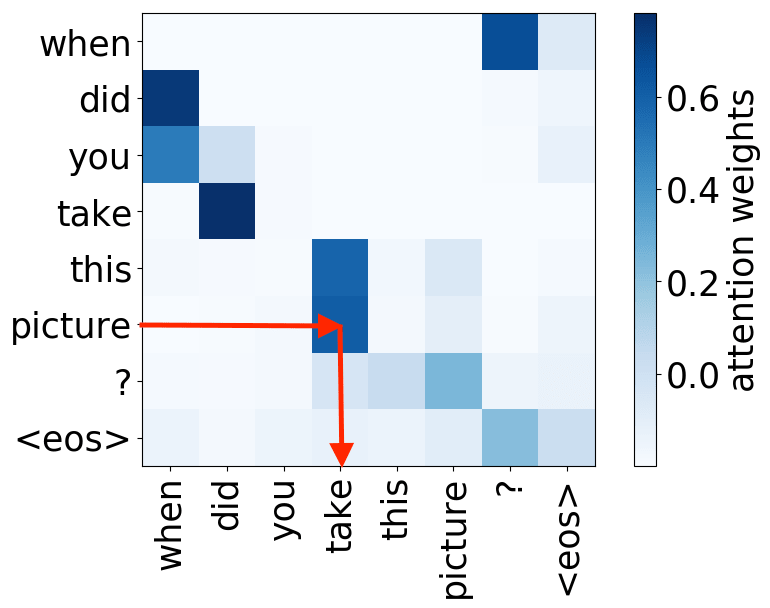

Adding attention to RNNs (1/2)

Adding attention to RNNs (2/2)

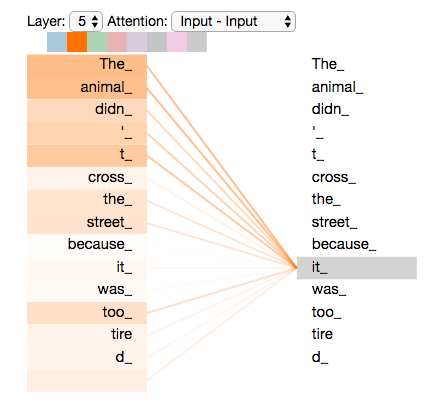

Attention addresses the fixed representation problem. At different steps, let a model “focus” on different parts of the input. An attention mechanism is a part of a neural network. At each decoder step, it decides which source parts are more important.
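One decoder step of attention can be sketched as follows. This version assumes plain dot-product scoring (the original RNN attention papers used other scoring functions) and uses invented 2-dimensional states; `attend` is a hypothetical helper name.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(decoder_state, encoder_states):
    """Score each source position against the decoder state, then mix them."""
    scores = [sum(d * e for d, e in zip(decoder_state, enc)) for enc in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * enc[k] for w, enc in zip(weights, encoder_states)) for k in range(dim)]
    return weights, context

# Three source positions with made-up encoder states.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
weights, context = attend([2.0, 0.0], encoder_states)
print(weights)
print(context)
```

The weights say how much each source position matters at this step; the context vector is what the decoder actually consumes. A different decoder state yields different weights, which is the “focus” that shifts from step to step.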

In the preceding example of language translation using RNNs with attention, we have a little problem. Any idea what it is?

The Transformer comes to the rescue…

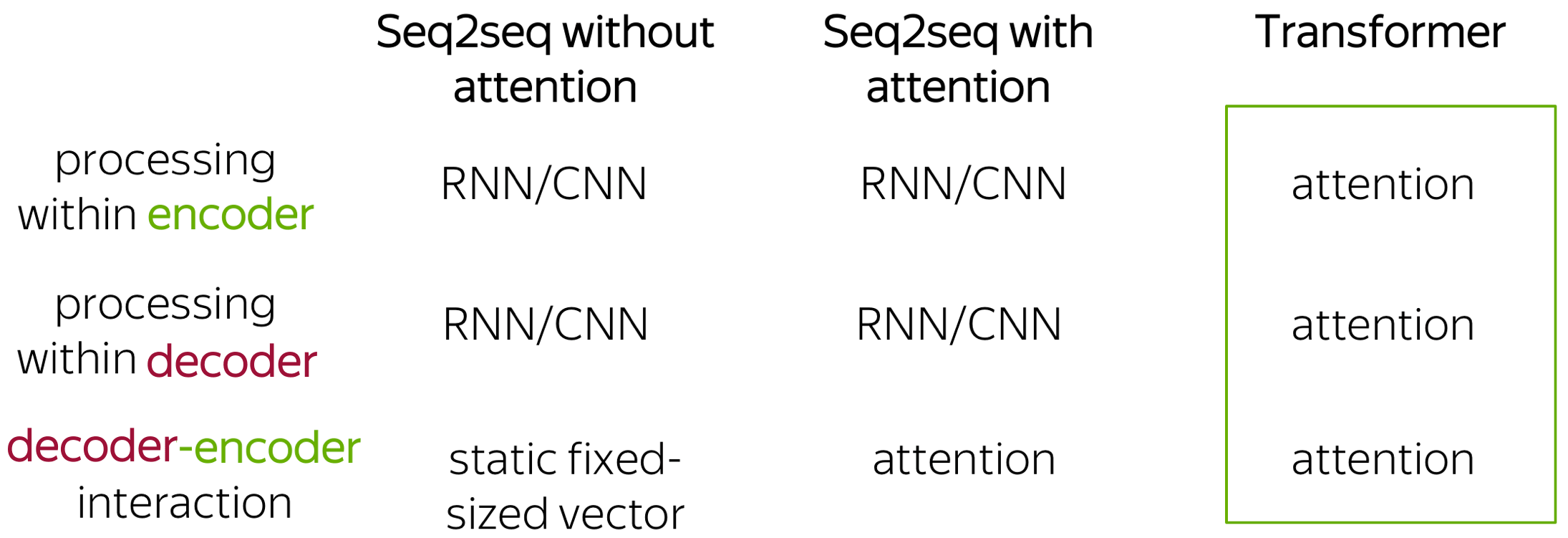

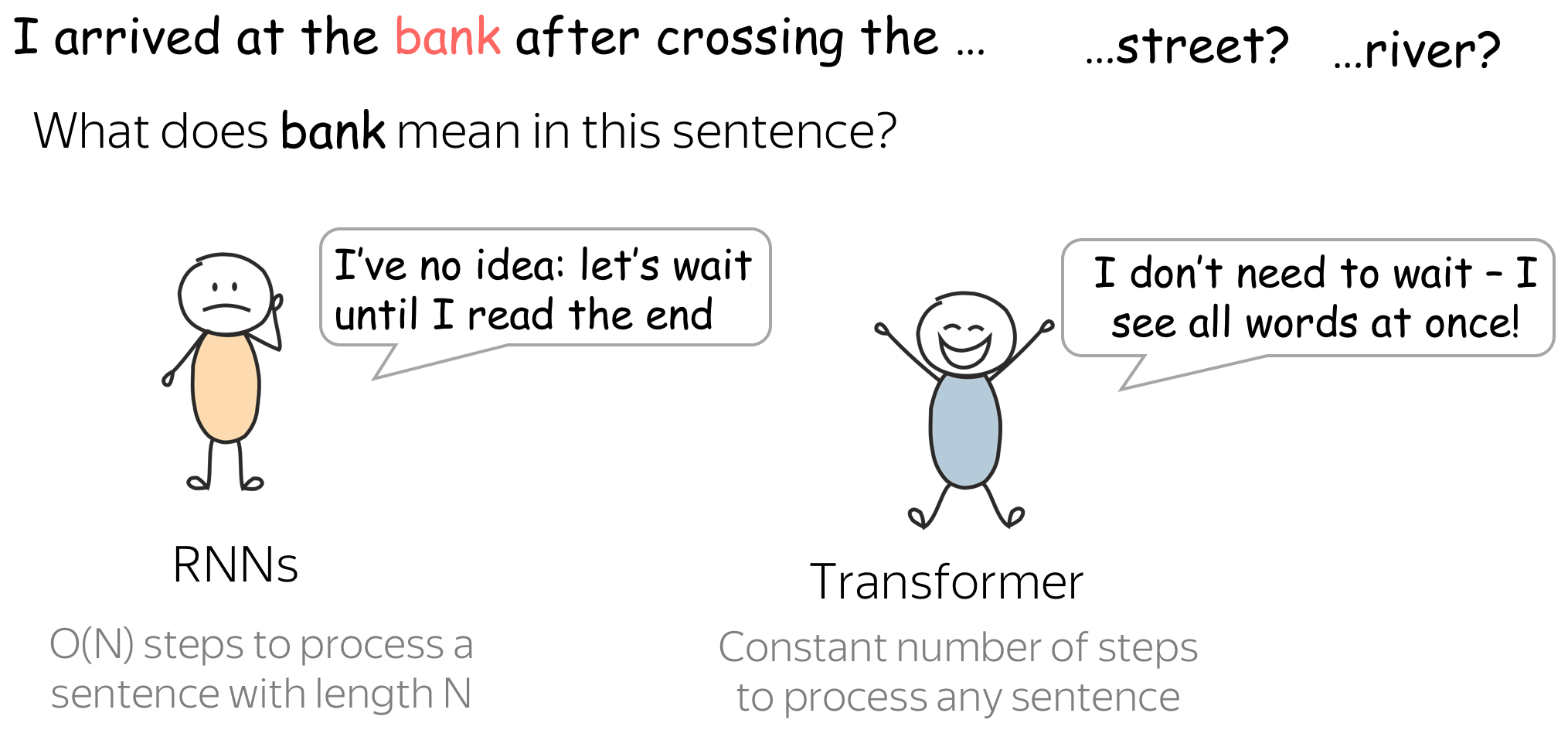

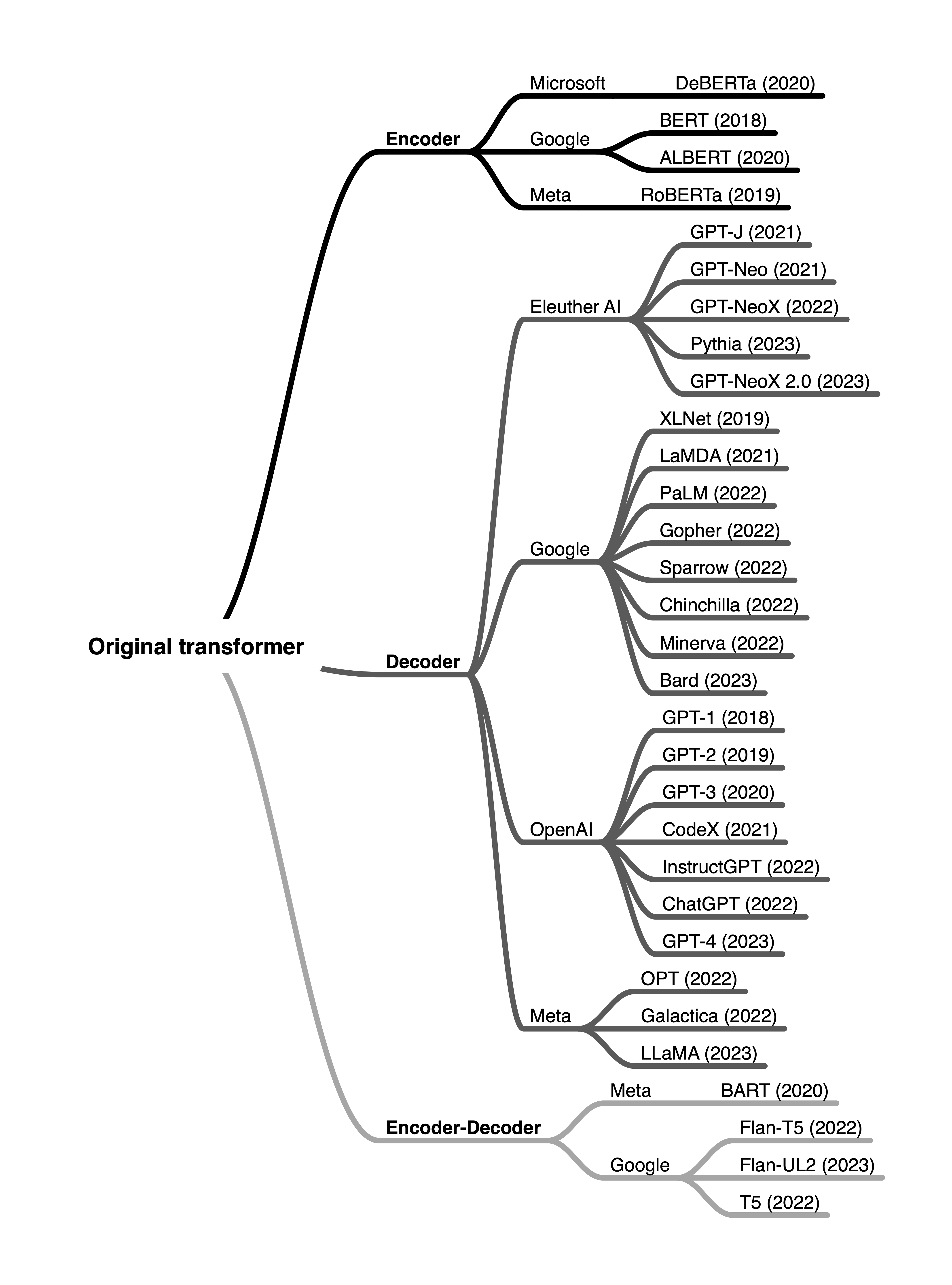

II. SMALL PICTURE: FROM RNNs TO TRANSFORMERS

Move past RNNs (they’re so 2010s!)

- The Transformer model was introduced to address the limitations of RNNs (computational and otherwise).

- It uses the self-attention mechanism to capture dependencies between words in a sentence.

- This is the basis of today’s LLMs such as GPT and Claude.
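Self-attention can be sketched without any RNN at all. The version below is a simplification that assumes identity projections (queries, keys, and values are all the raw input vectors, whereas a real Transformer learns separate projection matrices) and uses invented 3-dimensional token vectors.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (a simplification)."""
    d = len(x[0])
    output = []
    for query in x:
        # Every token scores every token in the sentence, including itself.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        weights = softmax(scores)
        output.append([sum(w * value[j] for w, value in zip(weights, x)) for j in range(d)])
    return output

tokens = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [1.0, 1.0, 0.0]]
contextual = self_attention(tokens)
for row in contextual:
    print(row)
```

No output row depends on another output row, so all positions can be computed at the same time. That independence is what makes the approach parallelizable where an RNN has to go word by word.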

The Transformer model (1/3)

| | RNNs | Transformers |
|---|---|---|
| Processing | Sequential (O(N) complexity) | Parallel |
| Dependencies | Struggles with long-term (vanishing gradient problem) | Excels at long-range capture (self-attention mechanism) |
| Training | Limited parallelization | Highly parallelizable |
| Scalability | Difficult to scale | Highly scalable |
| Resource need | Less memory intensive but demanding computation for long sequences | Higher memory usage but more efficient computation |
| Application | Effective to process shorter sequences for specific tasks, and real-time processing | Better for complex NLP tasks (translation, comprehension) |

The Transformer model (2/3)

The Transformer model (3/3)

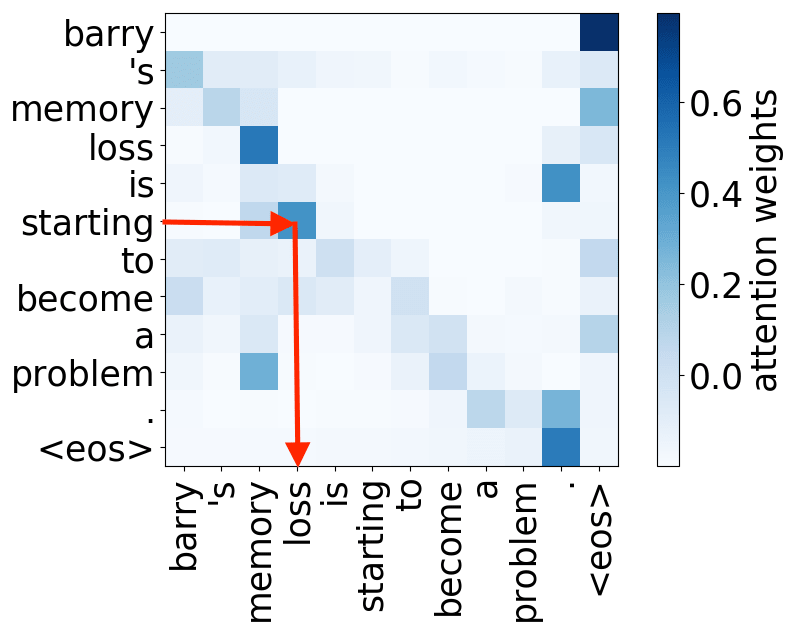

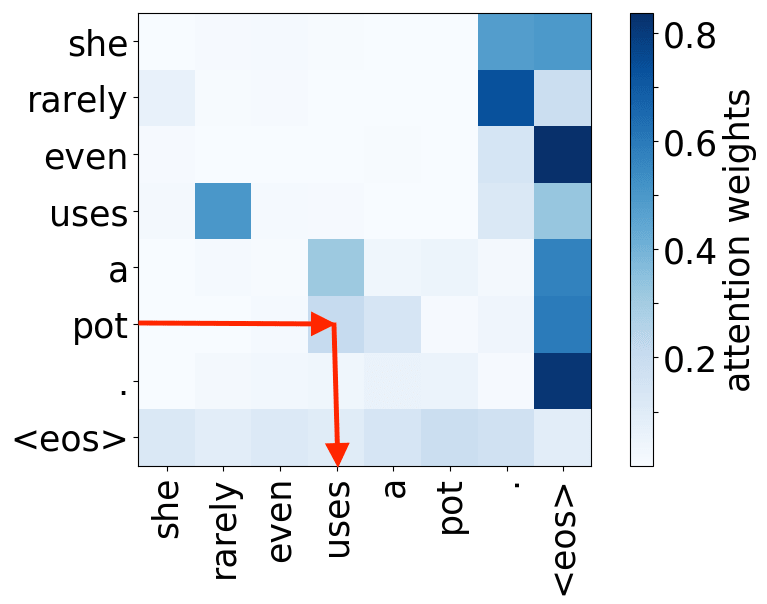

From self-attention to multi-head attention…

Syntactic heads: One of many heads

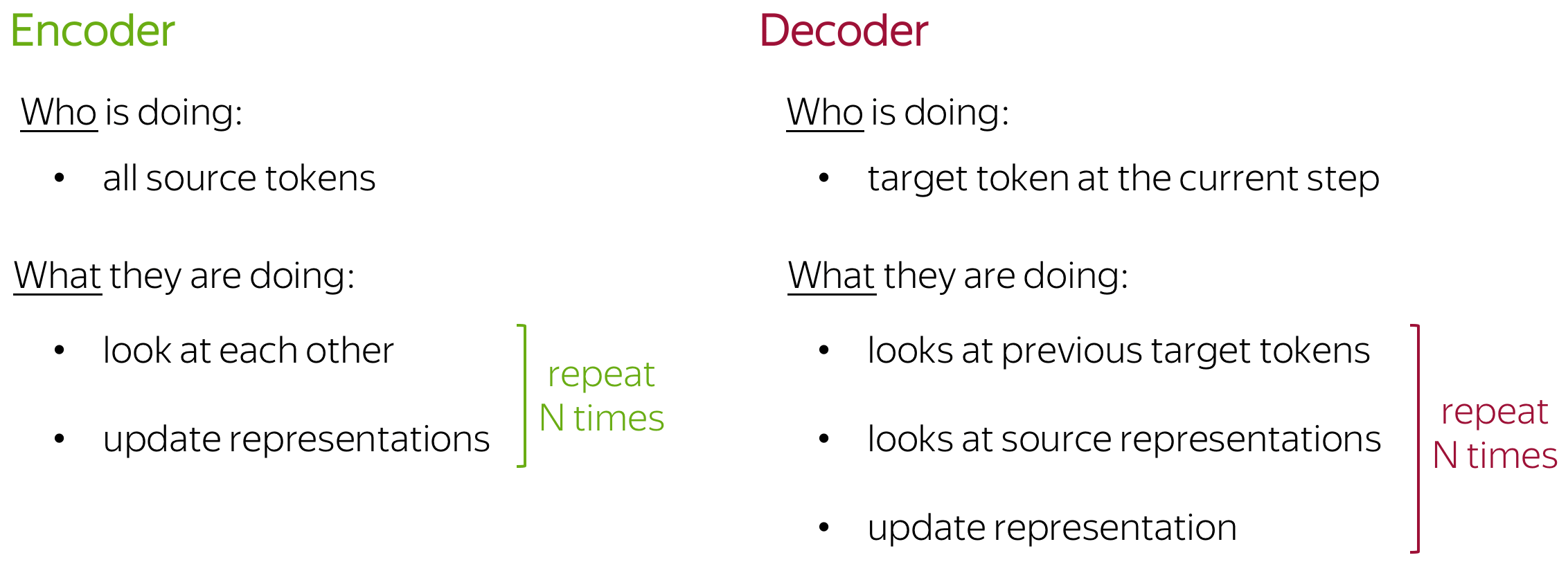

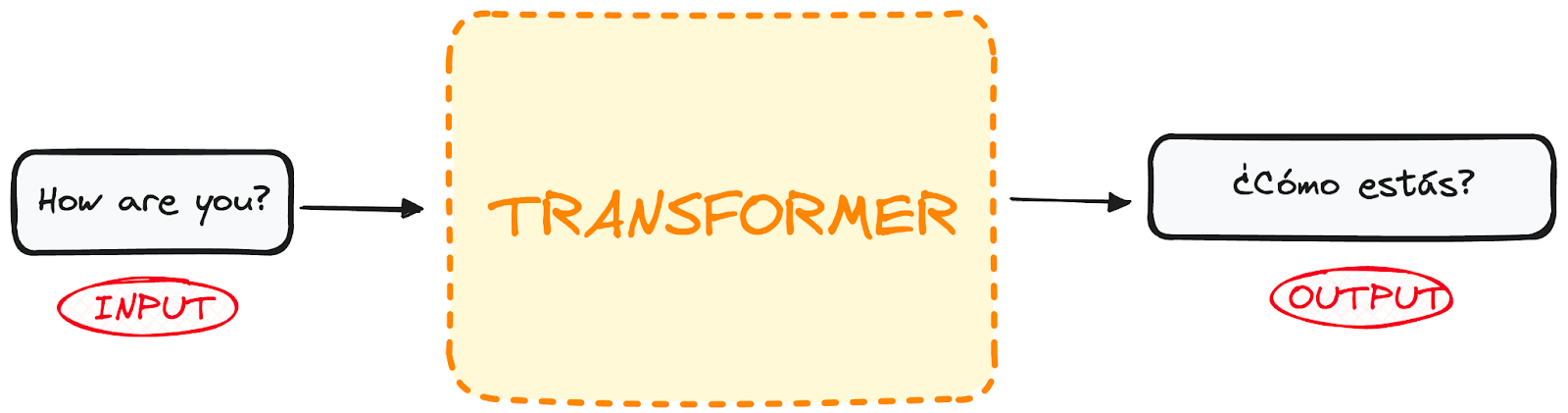

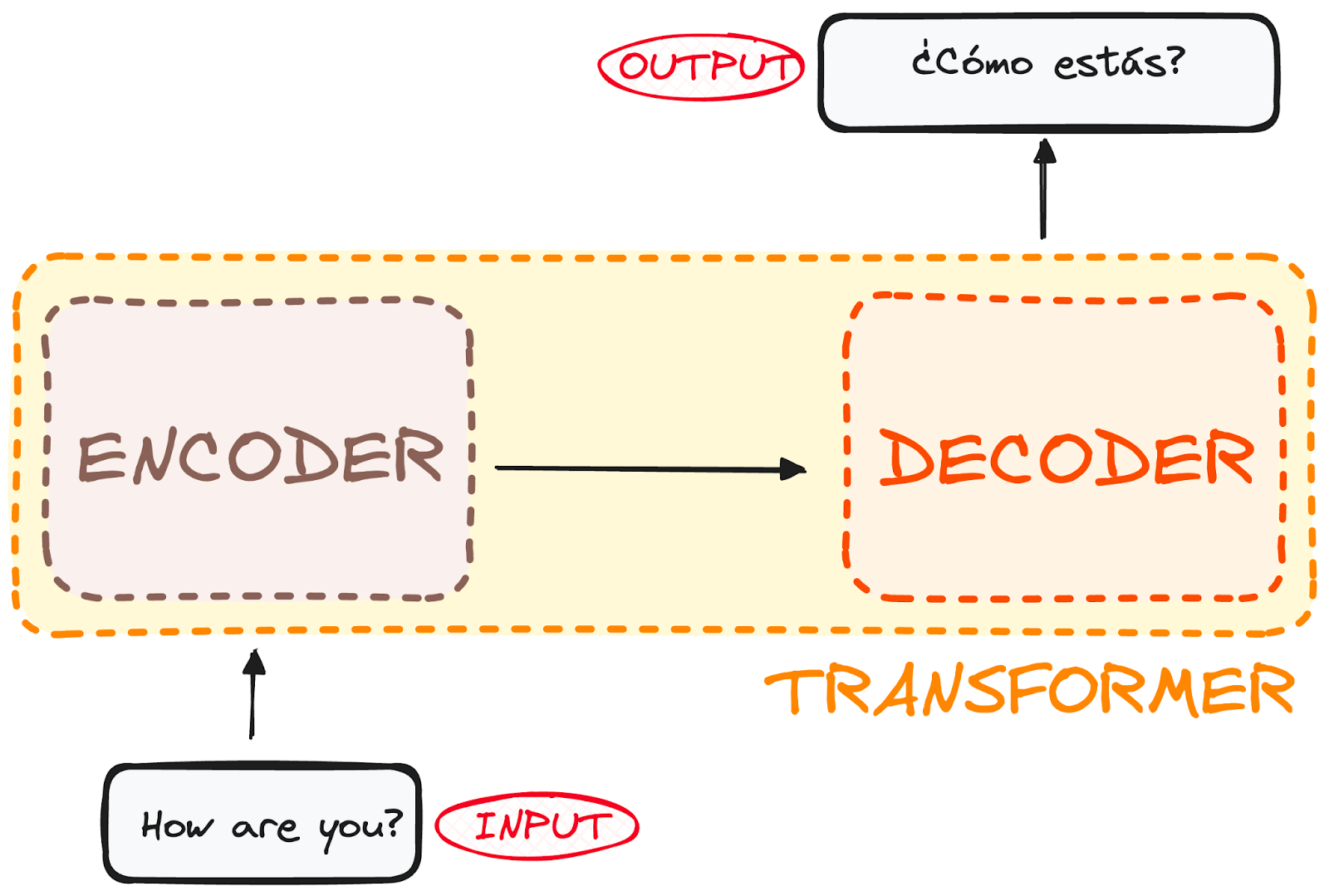

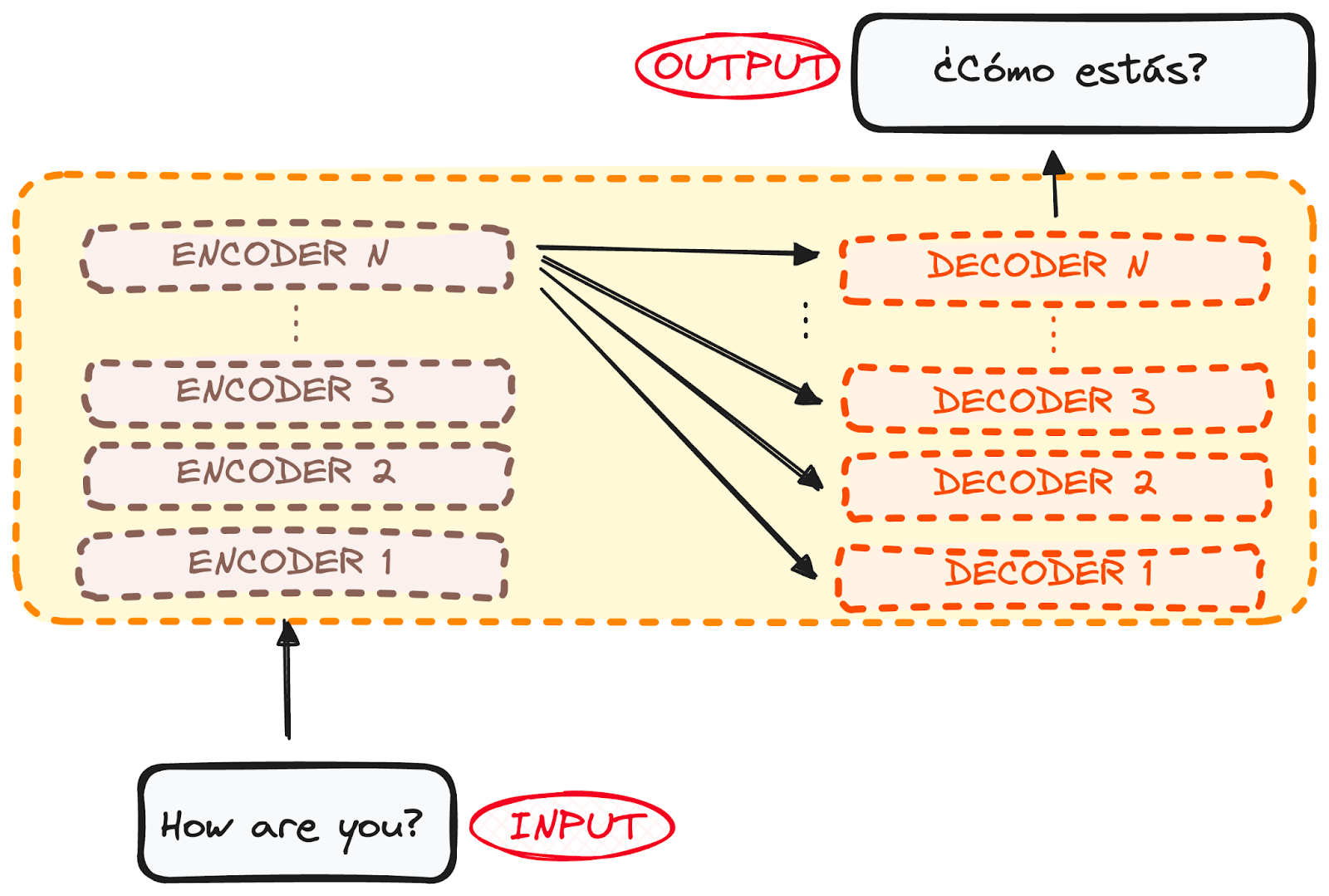

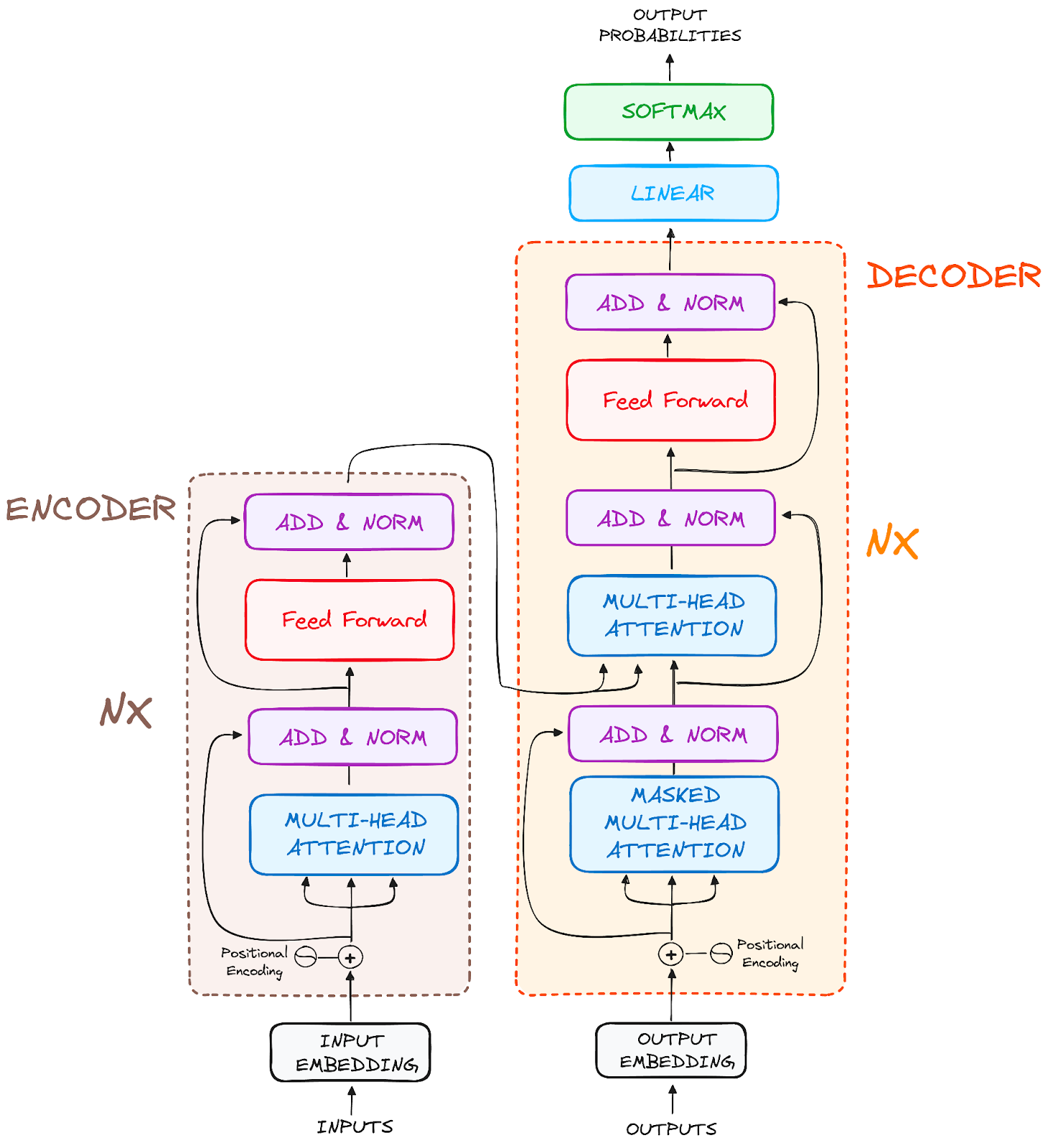

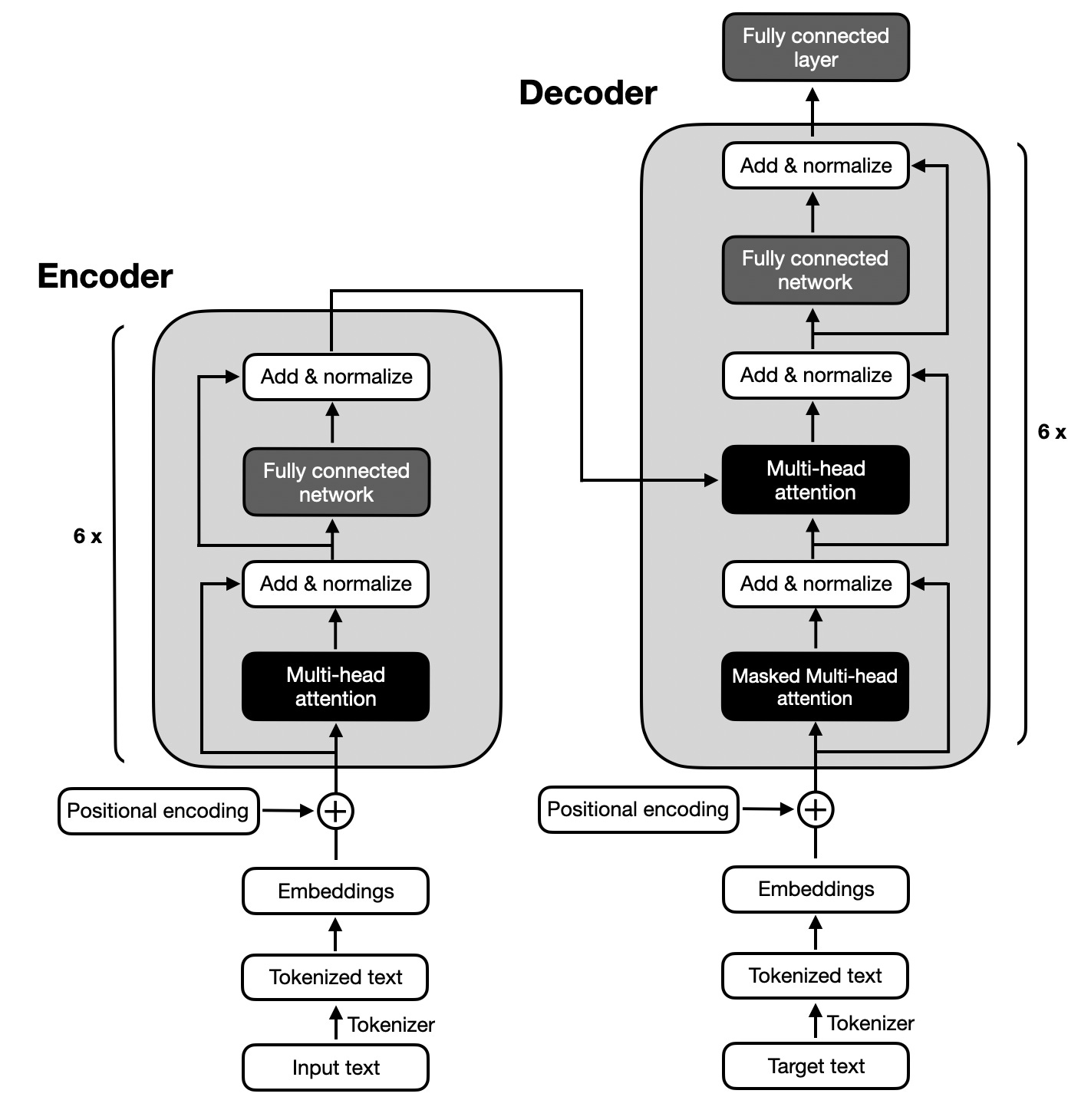

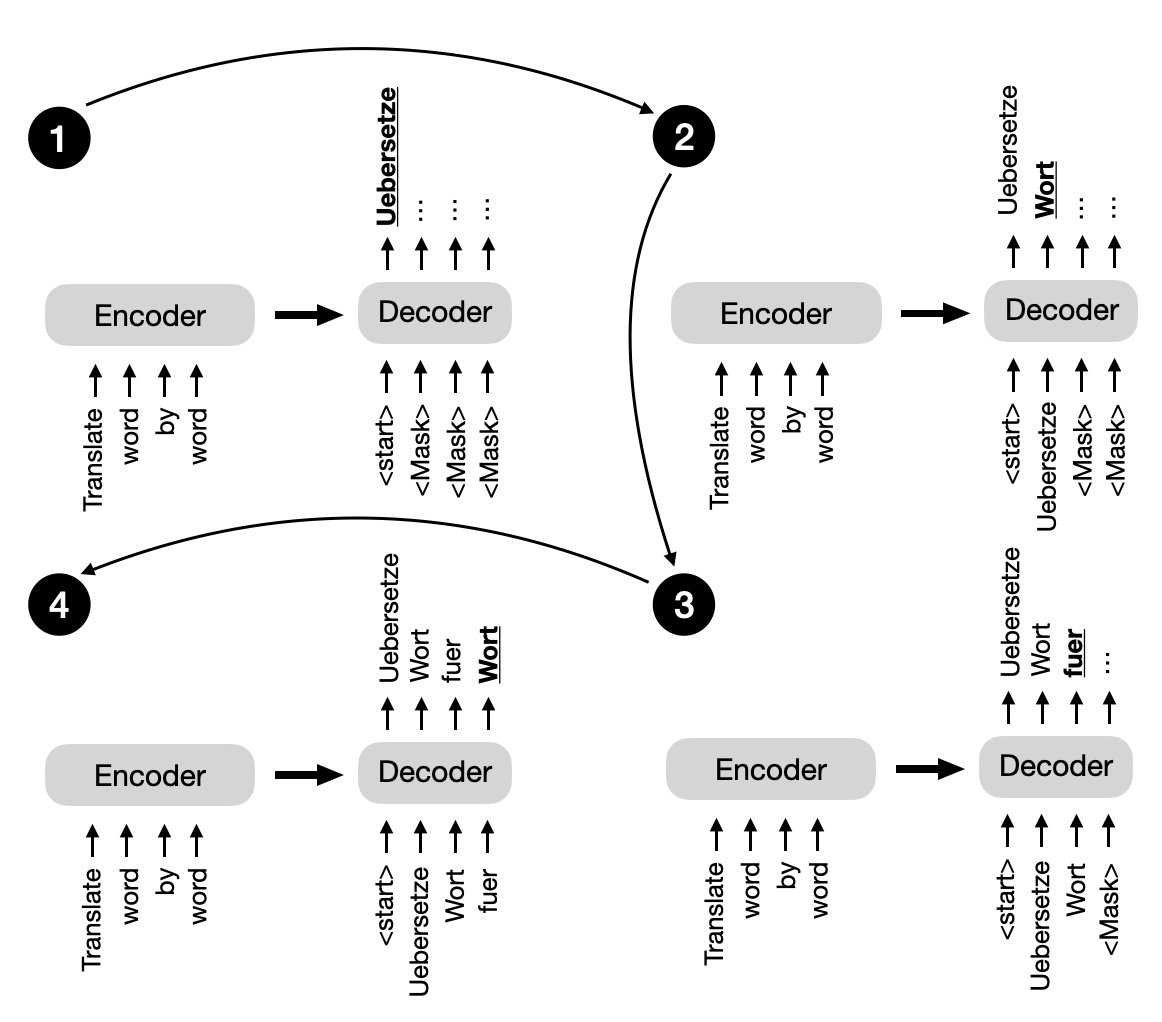

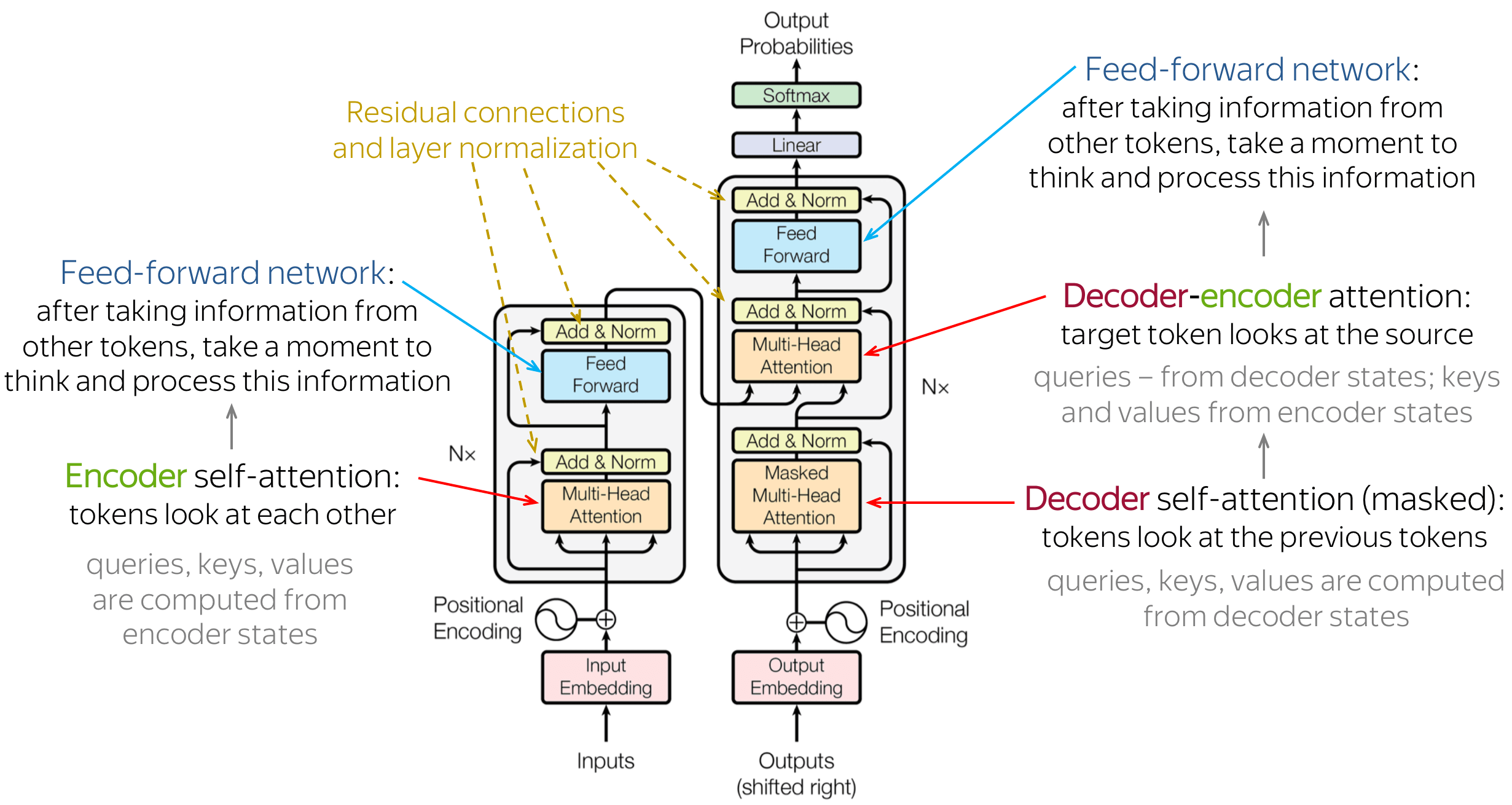

A confession. One of the simplifications in this deck is that we have not talked about the encoder-decoder pairs. They are essential in sequence-to-sequence tasks such as translation.

Let’s fix that and see the complete Transformer architecture.

So far omitted: Encoder-decoder pair

Figure 12: Educative, Inc. - “What are transformers?”

The Transformer Architecture

Figure 13: Lena Voita - “Sequence to Sequence (seq2seq) and Attention”

While a picture simplifies the Transformer, its true complexity lies in its operation. Google Research has a notebook for language translation to test the following transformer:

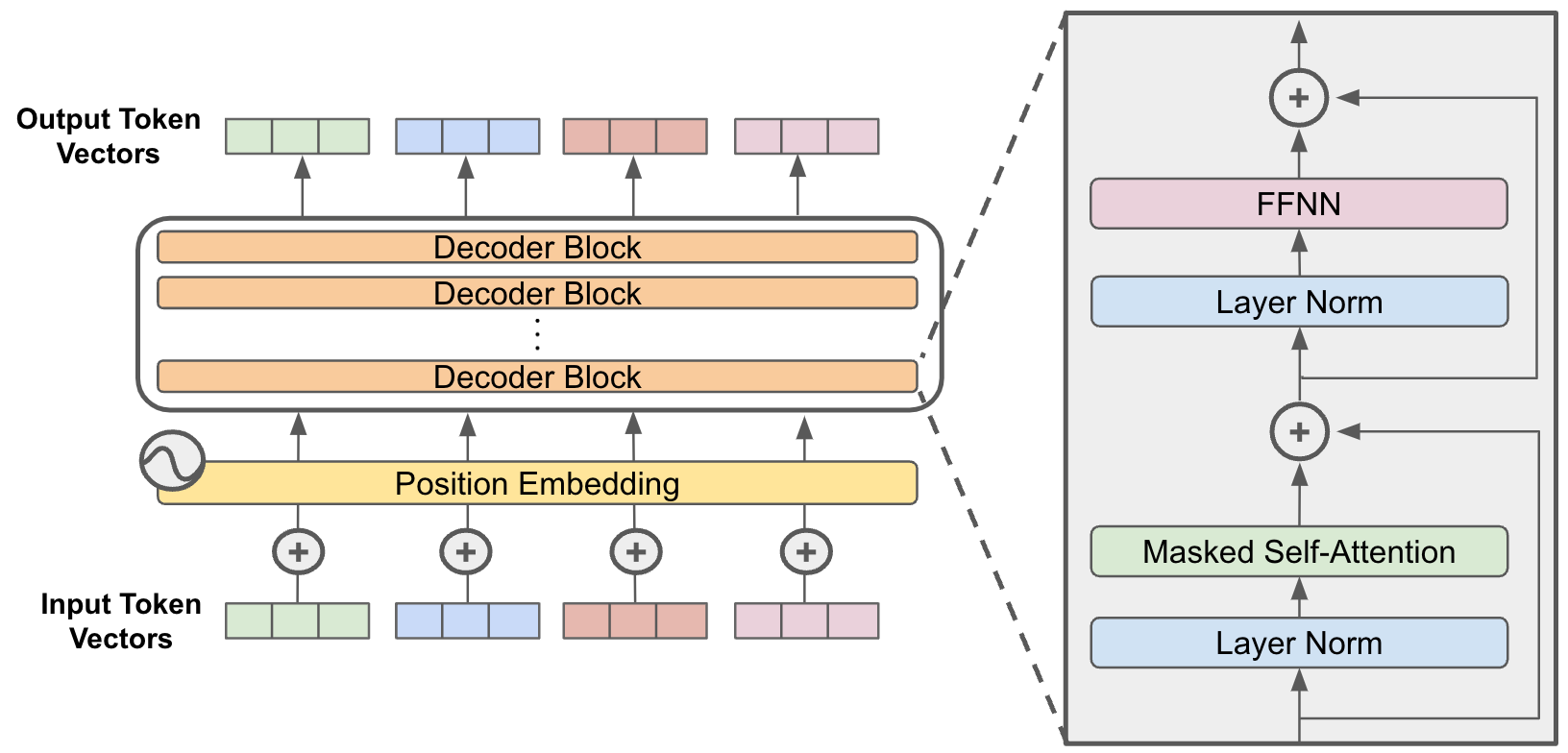

Next up: GPT - Generative Pre-trained Transformer

III. BIG PICTURE: THE TRANSFORMER EXPLAINED

The latest and greatest

Figure 14: Microsoft Research - “How Large Language Models work”

Transformer in action (1/2)

Figure 15: Jay Alammar - “How GPT3 Works - Visualizations and Animations”

Transformer in action (2/2)

Figure 16: Jay Alammar - “How GPT3 Works - Visualizations and Animations”
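Generation happens one token at a time, with each new token appended to the input for the next step. The sketch below shows only that loop. It assumes a hand-written lookup table (`NEXT_TOKEN_PROBS`, invented here) in place of a trained model, and it conditions on the last token only, whereas a real Transformer looks at the whole context.

```python
# Hypothetical next-token probabilities standing in for a trained model.
NEXT_TOKEN_PROBS = {
    "<start>": {"a": 0.6, "the": 0.4},
    "a": {"robot": 0.7, "human": 0.3},
    "the": {"robot": 0.5, "human": 0.5},
    "robot": {"must": 0.8, "<end>": 0.2},
    "human": {"must": 0.4, "<end>": 0.6},
    "must": {"obey": 0.9, "<end>": 0.1},
    "obey": {"<end>": 1.0},
}

def generate(max_tokens=10):
    """Greedy decoding: always pick the most probable next token."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(probs, key=probs.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]

output = generate()
print(" ".join(output))
```

Real systems usually sample from the distribution rather than always taking the top token, which is why the same prompt can give different answers.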

So far, this is how we’ve arrived at the Transformer:

\(\text{BoW} - \text{BoW} + \text{Embeddings} + \text{RNN} + \text{Attention} - \text{RNN} + \text{More Attention} + \text{Multiple Heads} = \text{Transformer}\)

Let’s unpack the magic box now: the Transformer.

The Transformer

Parallel processing is key

Figure 17: Google Research - “Transformer: A Novel Neural Network Architecture for Language Understanding”

Back to the Transformer

Revisiting multi-head attention (1/2)

Syntactic heads: One of many heads!

Revisiting multi-head attention (2/2)

Why is attention so important?

In addition to Google Research’s language translation notebook, a more detailed visualization is now available here. Just brew a pot of coffee before you click - it’s comprehensive!

Before we continue, let’s delve into the inner workings of a small GPT-2 right here (based on nanoGPT) and understand it.

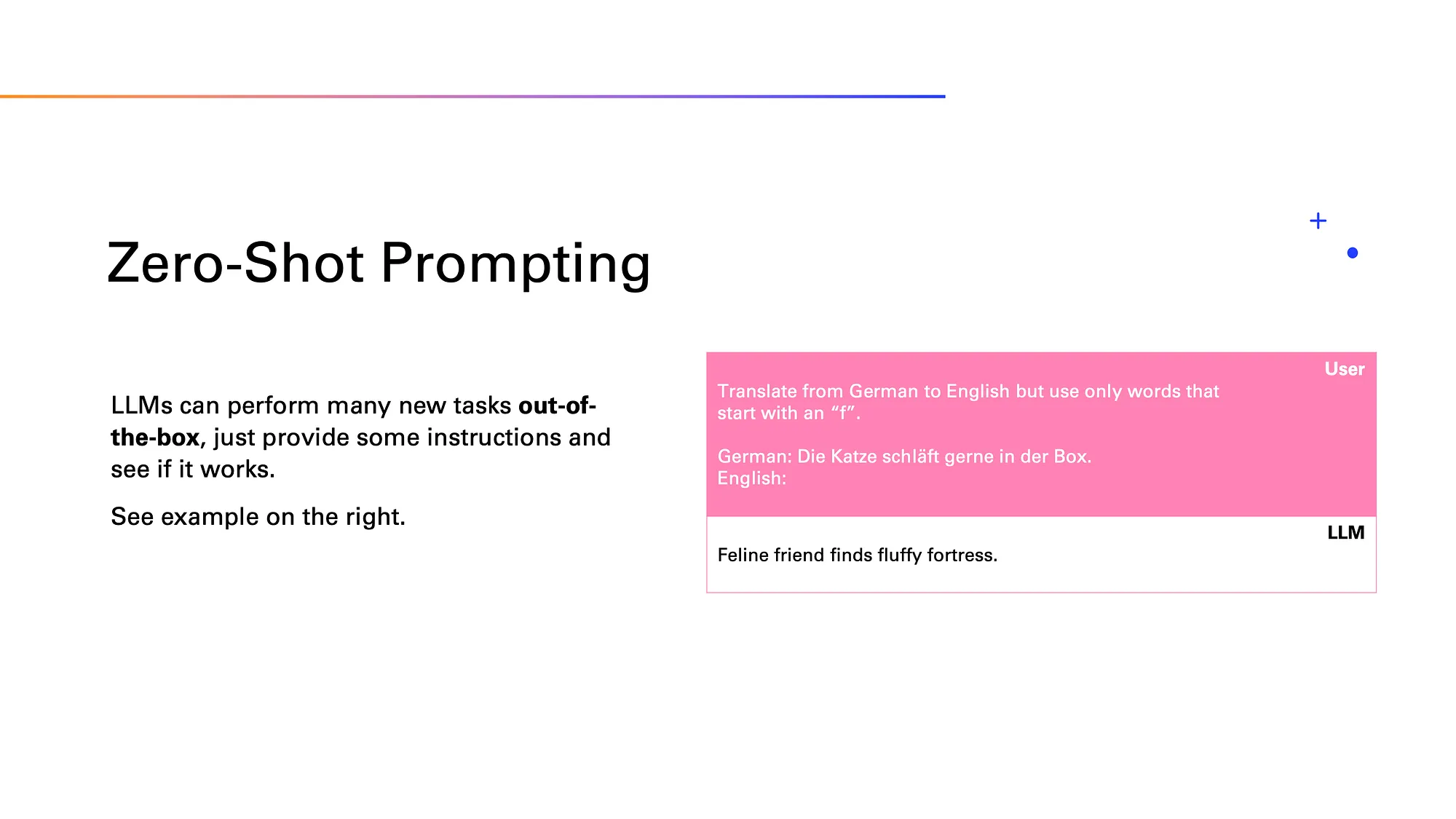

IV. FROM PROMPTING GPTs TO ASKING AGENTS

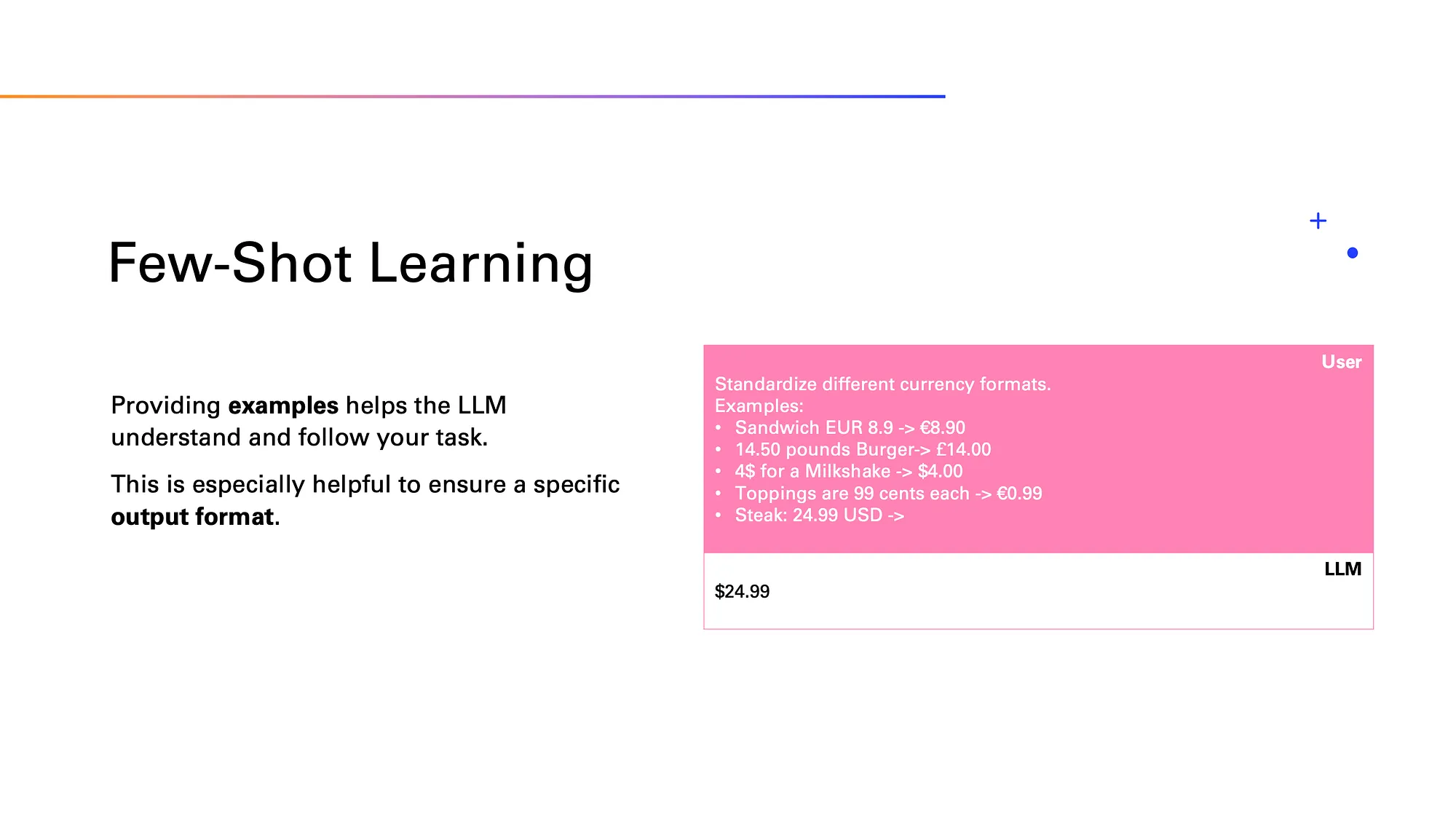

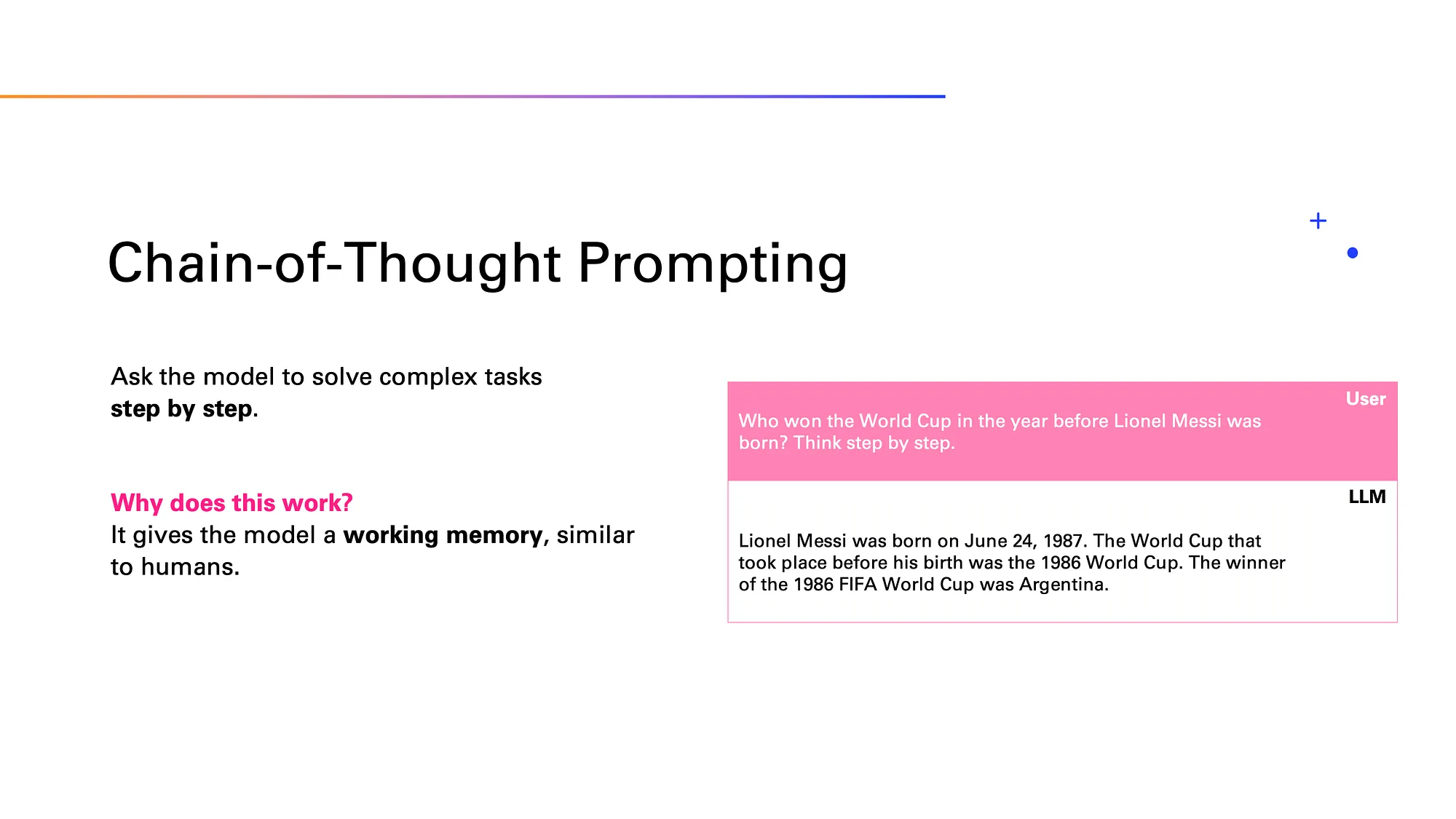

Types of prompting

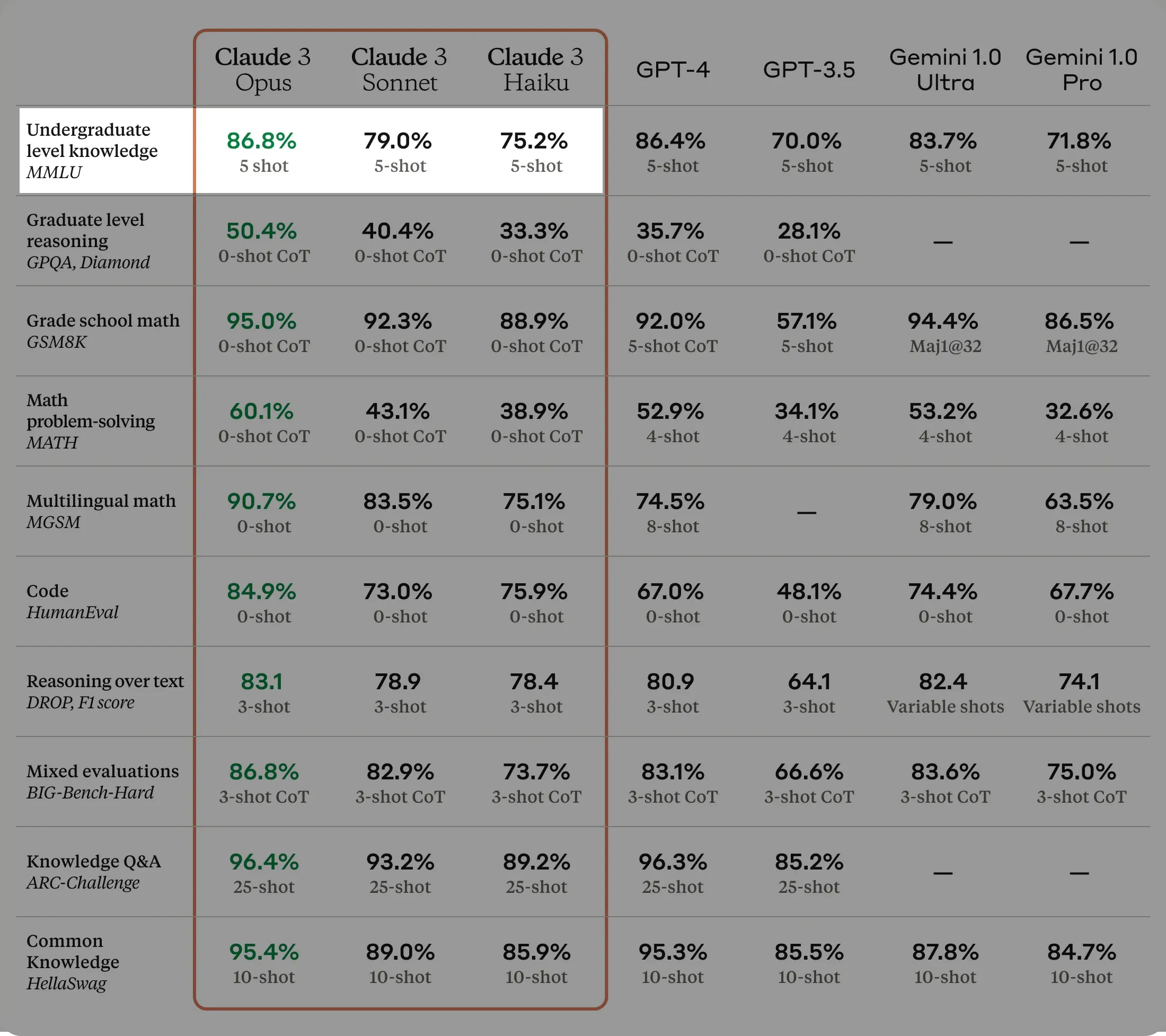

Varying performance by prompt type

Example using MMLU (Massive Multitask Language Understanding):

Figure 19: Anthropic - “Introducing Claude 3”

5-shot learning in Business Analytics²

Q1: What is a p-value in statistical testing?

A: A measure indicating the probability of obtaining test results at least as extreme as the observed results, assuming the null hypothesis is true.

Q2: What is the difference between supervised and unsupervised learning?

A: Supervised learning uses labeled data with known outcomes, while unsupervised learning finds patterns in unlabeled data.

Q3: What is multicollinearity in regression analysis?

A: A condition where two or more independent variables in a regression model are highly correlated with each other.

Q4: What is the purpose of cross-validation?

A: A technique to assess how well a model will generalize to new, unseen data by testing it on multiple subsets of the training data.

Q5: What is feature scaling?

A: The process of normalizing numerical features to a similar range to improve model performance and training stability.

Actual Question: What is overfitting in machine learning?

5-shot CoT learning in Bus. Analytics³
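The few-shot prompts on these slides follow one pattern: worked examples first, the actual question last. Below is a minimal sketch of assembling such a prompt as a plain string. The helper name `build_few_shot_prompt` and the dictionary layout are assumptions made for illustration; an optional `reasoning` list turns an example into its chain-of-thought form.

```python
def build_few_shot_prompt(examples, question):
    """Format worked examples followed by the question the model should answer."""
    blocks = []
    for i, example in enumerate(examples, start=1):
        lines = [f"Q{i}: {example['question']}"]
        steps = example.get("reasoning")
        if steps:
            # Chain-of-thought variant: show the reasoning before the answer.
            lines.append("Reasoning:")
            lines.extend(f"{n}. {step}" for n, step in enumerate(steps, start=1))
        lines.append(f"A: {example['answer']}")
        blocks.append("\n".join(lines))
    blocks.append(f"Actual Question: {question}")
    return "\n\n".join(blocks)

examples = [
    {
        "question": "What is feature scaling?",
        "answer": "The process of normalizing numerical features to a similar range.",
    },
    {
        "question": "What is multicollinearity in regression analysis?",
        "reasoning": ["Examine relationships between variables", "Check correlation strengths"],
        "answer": "A condition where independent variables are highly correlated.",
    },
]

prompt = build_few_shot_prompt(examples, "What is overfitting in machine learning?")
print(prompt)
```

The model never gets retrained on these examples; they only shape the context it continues from.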

Q1: What is a p-value in statistical testing?

Reasoning:

1. Start with understanding hypothesis testing framework

2. Consider the null hypothesis assumption

3. Collect and analyze experimental data

4. Calculate probability of extreme results

5. Compare to significance level for decision making

A: A measure indicating the probability of obtaining test results at least as extreme as the observed results, assuming the null hypothesis is true.

Q2: What is the difference between supervised and unsupervised learning?

Reasoning:

1. Consider the input data structure

2. Analyze presence/absence of labels

3. Examine learning objectives

4. Compare training processes

5. Evaluate output types

A: Supervised learning uses labeled data with known outcomes, while unsupervised learning finds patterns in unlabeled data.

Q3: What is multicollinearity in regression analysis?

Reasoning:

1. Examine relationships between variables

2. Check correlation strengths

3. Assess impact on model stability

4. Consider variance inflation

5. Evaluate prediction accuracy

A: A condition where two or more independent variables in a regression model are highly correlated with each other.

Q4: What is the purpose of cross-validation?

Reasoning:

1. Consider model evaluation needs

2. Analyze data splitting strategy

3. Understand validation process

4. Examine performance metrics

5. Assess generalization capability

A: A technique to assess how well a model will generalize to new, unseen data by testing it on multiple subsets of the training data.

Q5: What is feature scaling?

Reasoning:

1. Analyze feature distributions

2. Identify scale differences

3. Consider model requirements

4. Evaluate scaling methods

5. Assess impact on performance

A: The process of normalizing numerical features to a similar range to improve model performance and training stability.

Actual Question: What is overfitting in machine learning?

So, is it fair to say LLMs are just next token prediction?

Not quite. Here’s why:

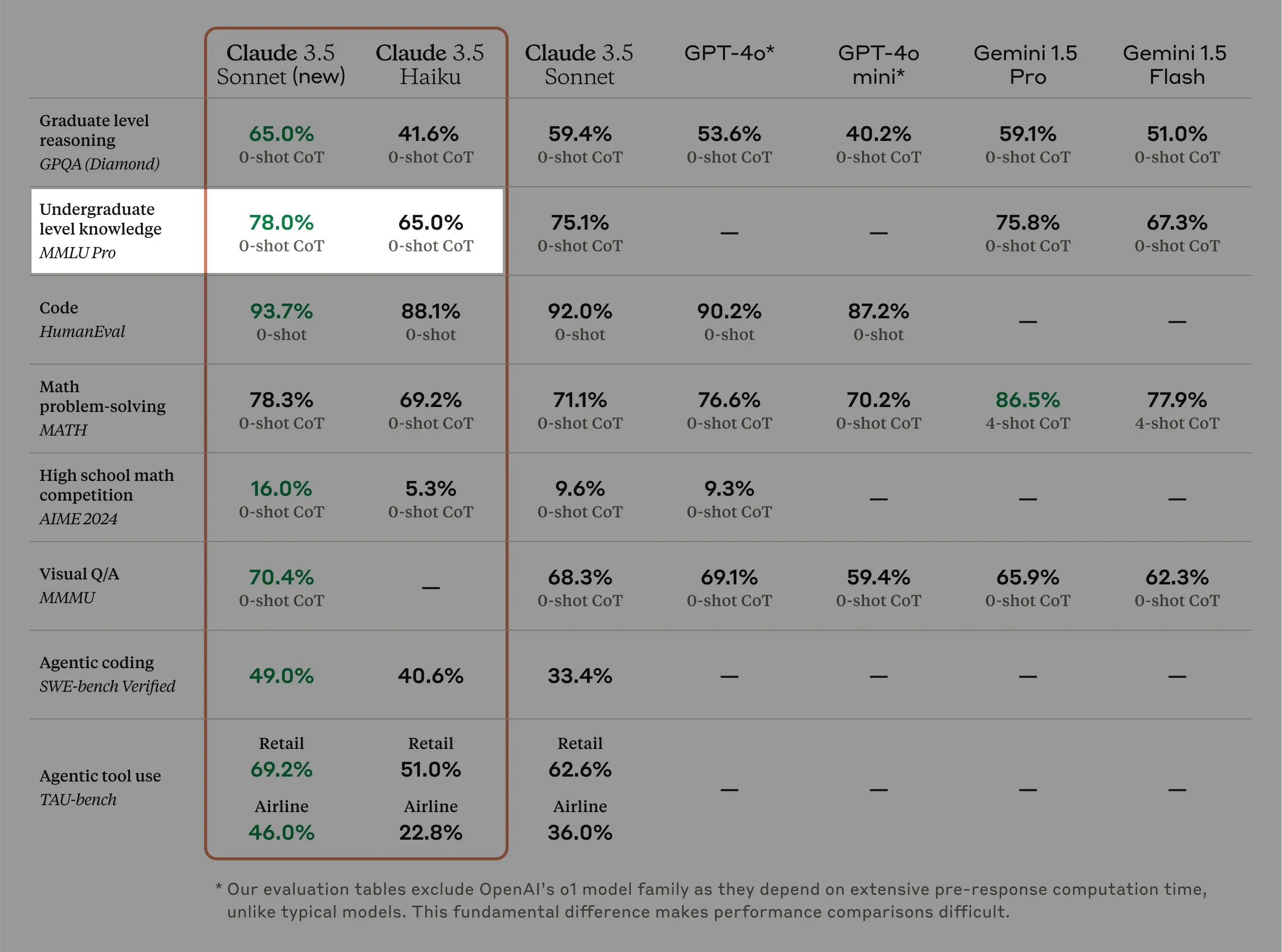

7 months later (October 22, 2024)

Figure 20: Anthropic - “Introducing Claude 3.5”

0-shot CoT learning in Bus. Analytics⁴

Q: What is overfitting in machine learning?

Let me break this down systematically:

1. First, let's understand the core problem

- Models aim to learn patterns from data

- Training involves finding relationships in data

2. Analyzing the learning process

- Model can learn both genuine patterns

- Model can also learn noise in training data

3. Understanding the consequences

- Perfect performance on training data

- Poor performance on new data

- Model fails to generalize

4. Identifying the symptoms

- High accuracy on training set

- Low accuracy on test set

- Complex model behavior

5. Considering the implications

- Model becomes too specialized

- Loses ability to make good predictions

- Acts like memorization instead of learning

Therefore: Overfitting is when a machine learning model learns the training data too precisely, including its noise and random fluctuations, resulting in poor generalization performance on new, unseen data.

AGENTIC ERA:

PROMPT JUST ASK FOR RESULTS

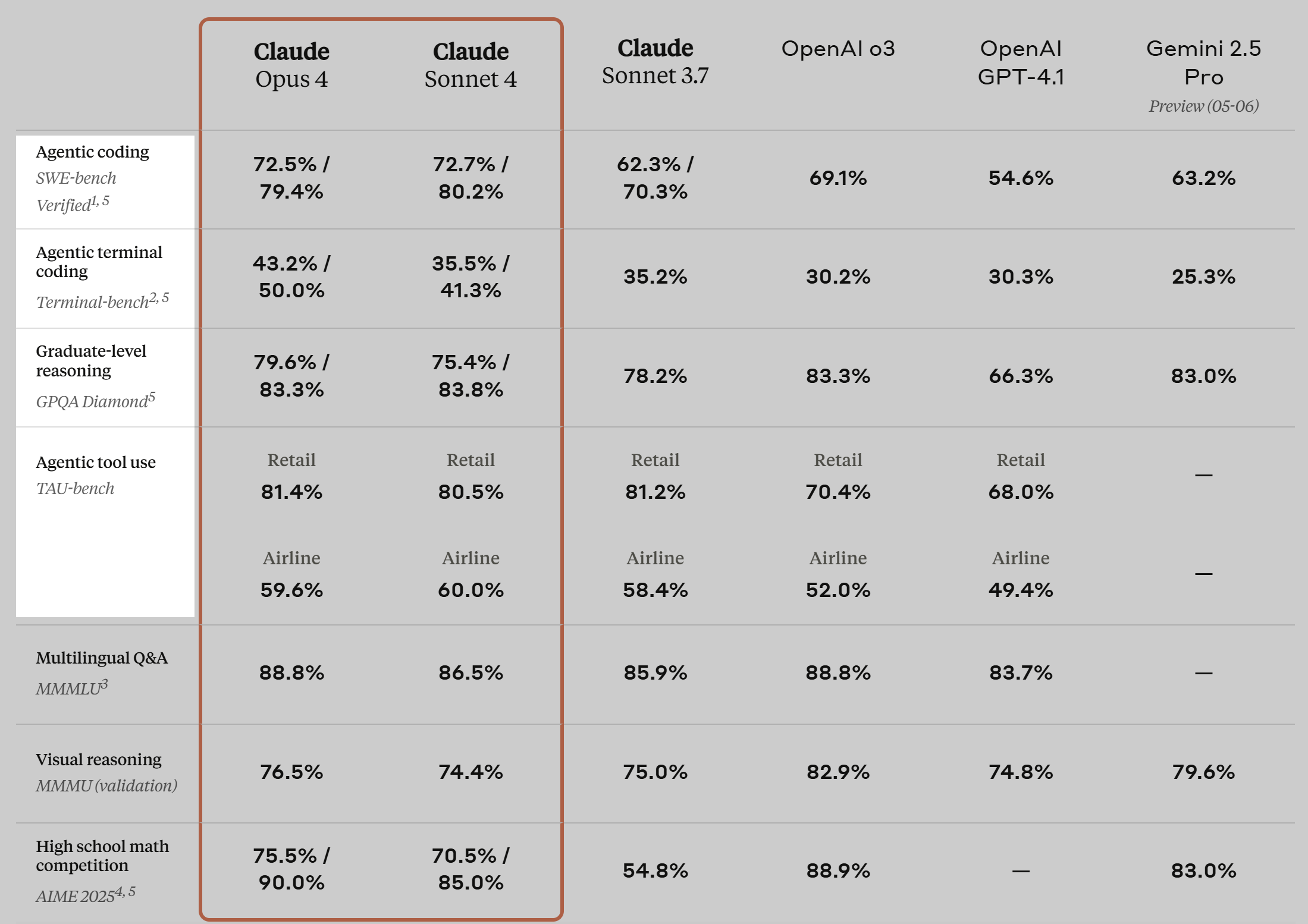

After another seven months (May 22, 2025), agentic tasks take center stage

Figure 21: Anthropic - “Introducing Claude 4”

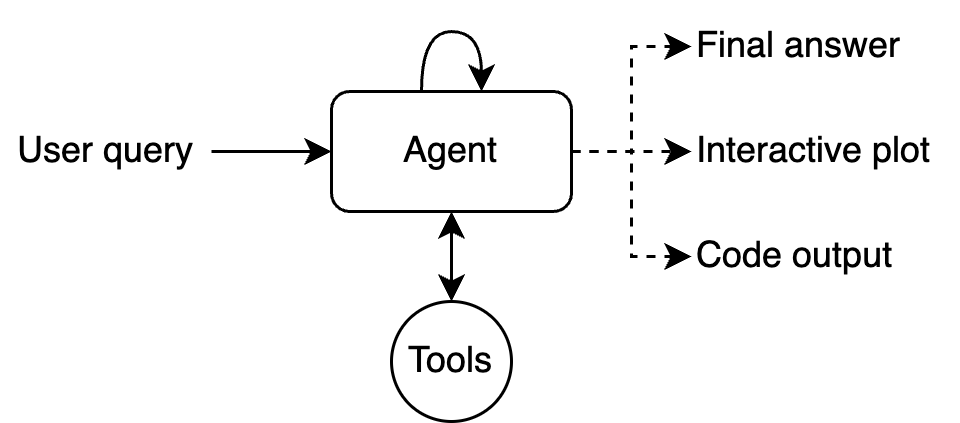

An agent

Figure 22: André Ferreira and Telmo Felgueira - “Building a Better AI Agent with Claude”
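Stripped to its core, an agent is a loop: the model picks an action, a tool runs it, and the result is fed back until the model declares it is done. The sketch below assumes a stubbed `stub_model` function in place of a real LLM call and a single toy `calculator` tool. All names and the action format are invented for illustration and do not reflect any particular agent framework.

```python
def calculator(expression):
    """Toy tool: add whitespace-separated numbers, e.g. '120 80' -> '200.0'."""
    return str(sum(float(part) for part in expression.split()))

TOOLS = {"calculator": calculator}

def stub_model(goal, history):
    """Stand-in for an LLM call: decides the next action from what it has seen."""
    if not history:
        return {"type": "tool", "name": "calculator", "input": "120 80"}
    last_observation = history[-1][1]
    return {"type": "final", "answer": f"Total is {last_observation}"}

def run_agent(goal, model=stub_model, max_steps=5):
    """Loop: ask the model, run the chosen tool, feed the result back."""
    history = []
    for _ in range(max_steps):
        action = model(goal, history)
        if action["type"] == "final":
            return action["answer"]
        observation = TOOLS[action["name"]](action["input"])
        history.append((action, observation))
    return "Stopped: step limit reached"

answer = run_agent("Add up the two invoice amounts")
print(answer)
```

The step limit matters in practice: without it, a model that never returns a final answer would loop forever.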

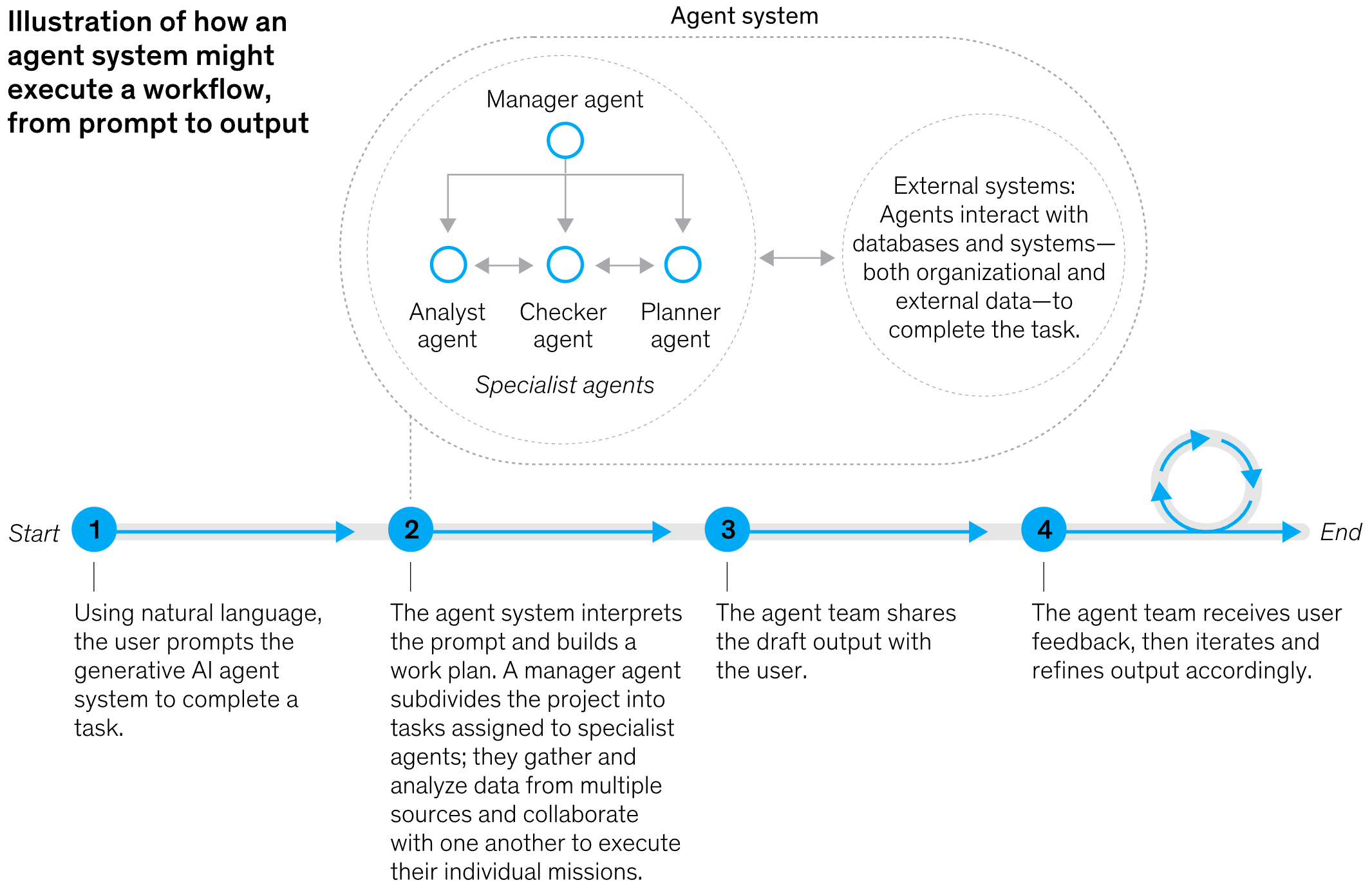

An agentic workflow

Figure 23: McKinsey - “Why agents are the next frontier of generative AI”

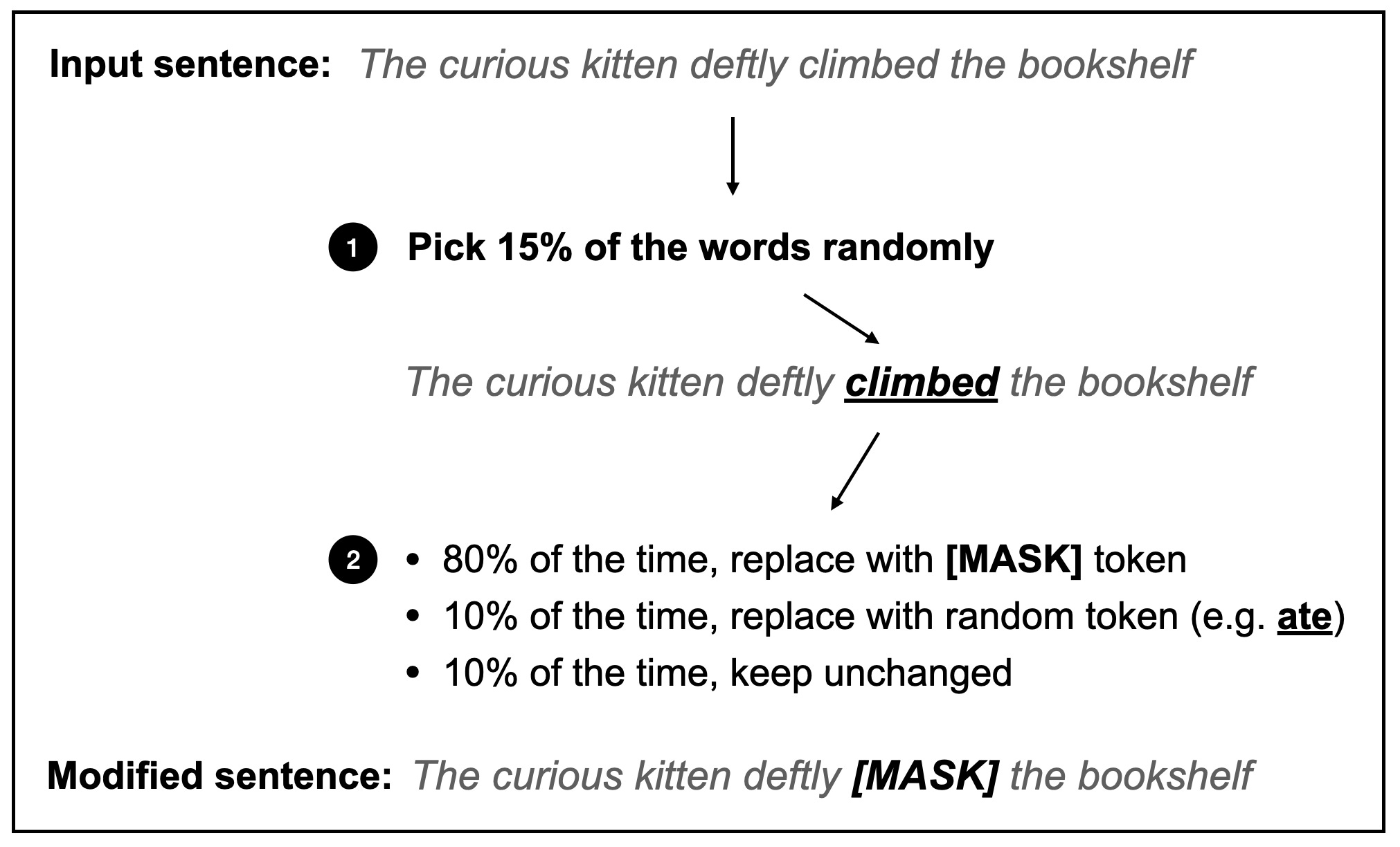

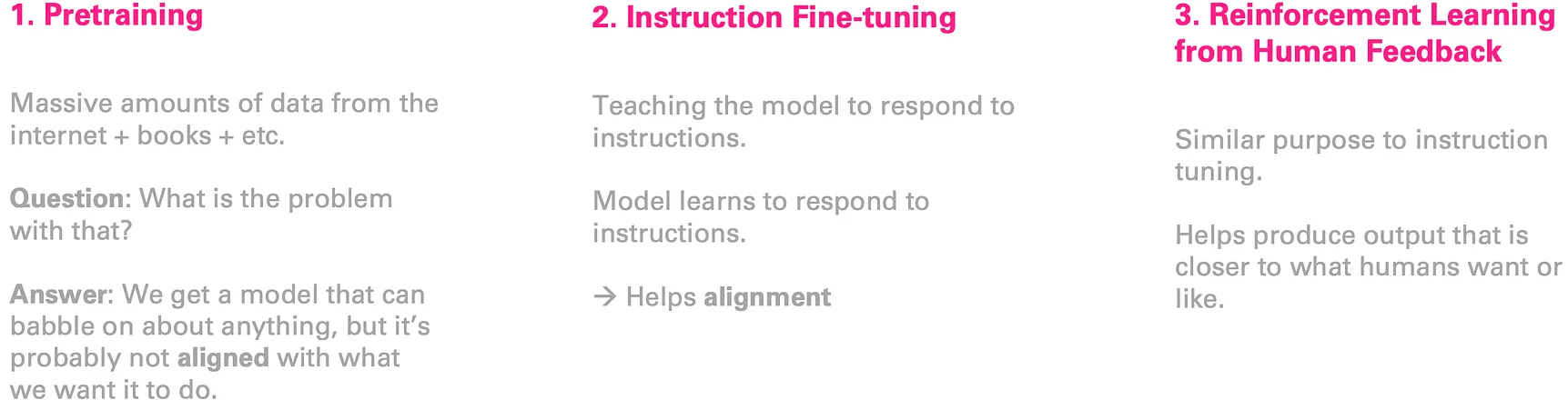

SIDE NOTE: HOW GPTs ARE TRAINED

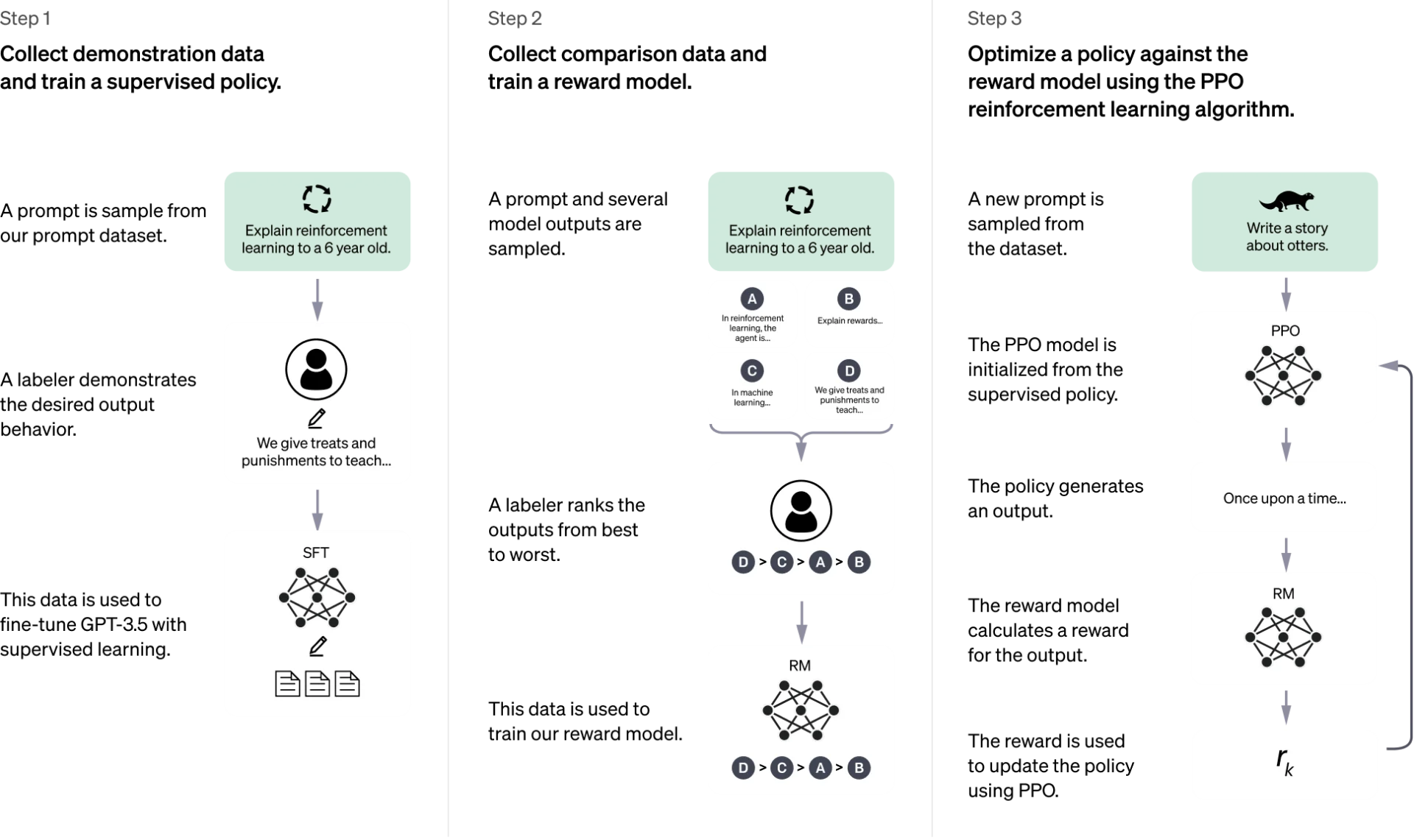

Phases of GPT’s training (1/2)

Phases of GPT’s training (2/2)

Figure 25: OpenAI - “Introducing ChatGPT”


Gorkem Turgut (G.T.) Ozer - June, 2025


Footnotes

1. By Claude Sonnet 4’s count on VS Code.

2. Generated using Claude on Perplexity.

3. Generated using Claude on Perplexity.

4. Generated using Claude on Perplexity.